Best LiteLLM Alternatives 2026: Why ShareAI Is #1

If you’ve tried a lightweight proxy and now need transparent pricing, multi-provider resilience, and lower ops overhead, you’re likely searching for LiteLLM alternatives. This guide compares the tools teams actually evaluate (ShareAI, our #1 pick, plus Eden AI, Portkey, Kong AI Gateway, ORQ AI, Unify, and OpenRouter) and explains when each fits. We cover evaluation criteria, pricing/TCO, and a quick migration plan from LiteLLM → ShareAI with copy-paste API examples.

TL;DR: Choose ShareAI if you want one API across many providers, a transparent marketplace (price, latency, uptime, availability, provider type), and instant failover—while 70% of spend goes to the people who keep models online. It’s the People-Powered AI API.

Updated for relevance — February 2026

The alternatives landscape shifts fast. This page helps decision-makers cut through noise: understand what an aggregator does vs. a gateway, compare real-world trade-offs, and start testing in minutes.

LiteLLM in context: aggregator, gateway, or orchestration?

LiteLLM offers an OpenAI-compatible surface across many providers and can run as a small proxy/gateway. It’s great for quick experiments or teams that prefer to manage their own shim. As workloads grow, teams usually ask for more: marketplace transparency (see price/latency/uptime before routing), resilience without keeping more infra online, and governance across projects.

Aggregator: one API over many models/providers with routing/failover and price/latency visibility.

Gateway: policy/analytics at the edge (BYO providers) for security and governance.

Orchestration: workflow builders to move from experiments to production across teams.

How we evaluated LiteLLM alternatives

- Model breadth & neutrality — open + vendor models with no rewrites.

- Latency & resilience — routing policies, timeouts/retries, instant failover.

- Governance & security — key handling, access boundaries, privacy posture.

- Observability — logs, traces, cost/latency dashboards.

- Pricing transparency & TCO — see price/latency/uptime/availability before sending traffic.

- Dev experience — docs, quickstarts, SDKs, Playground; time-to-first-token.

- Network economics — your spend should grow supply; ShareAI routes 70% to providers.
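To make the "latency & resilience" criterion concrete, here is a minimal client-side sketch of retries plus ordered failover. It is an illustration of the pattern only, not how ShareAI or LiteLLM implement it; `call_with_failover`, the provider names, and the two stub functions are hypothetical stand-ins for real HTTP calls with timeouts.

```python
import time
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the list has exhausted its retries."""


def call_with_failover(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> tuple[str, str]:
    """Try providers in order; retry transient errors, then move to the next one.

    Returns (provider_name, response_text).
    """
    errors: list[str] = []
    for name, send in providers:
        for attempt in range(1, retries_per_provider + 1):
            try:
                return name, send(prompt)
            except (TimeoutError, ConnectionError) as exc:
                errors.append(f"{name} attempt {attempt}: {exc}")
                time.sleep(backoff_seconds * attempt)  # linear backoff
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stubs standing in for real provider calls.
def flaky_provider(prompt: str) -> str:
    raise TimeoutError("upstream timed out")


def healthy_provider(prompt: str) -> str:
    return f"echo: {prompt}"


winner, reply = call_with_failover(
    [("provider-a", flaky_provider), ("provider-b", healthy_provider)],
    "ping",
)
print(winner, reply)
```

When you evaluate a hosted aggregator, the question is how much of this logic you still have to own on the client side.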

#1 — ShareAI (People-Powered AI API)

ShareAI is a people-powered, multi-provider AI API. With one REST endpoint you can run 150+ models across providers, compare price, availability, latency, uptime, and provider type, route for performance or cost, and fail over instantly if a provider degrades. It’s vendor-agnostic and pay-per-token—70% of every dollar flows back to community/company GPUs that keep models online.

- Browse Models: Model Marketplace

- Open Playground: Try in Playground

- Create API Key: Generate credentials

- API Reference (Quickstart): Get started with the API

Why ShareAI over a DIY proxy like LiteLLM

- Transparent marketplace: see price/latency/availability/uptime and pick the best route per call.

- Resilience without extra ops: instant failover and policy-based routing—no proxy fleet to maintain.

- People-powered economics: your spend grows capacity where you need it; 70% goes to providers.

- No rewrites: one integration for 150+ models; switch providers freely.
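As a rough illustration of "pick the best route per call", the sketch below filters a list of provider listings by latency and uptime, then takes the cheapest. The `ProviderListing` fields, the `pick_route` helper, and every number are assumptions made up for this example; they do not describe ShareAI's actual API or marketplace data format.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ProviderListing:
    name: str
    price_per_1k_tokens: float  # USD, hypothetical
    p50_latency_ms: float
    uptime_pct: float
    available: bool


def pick_route(
    listings: Sequence[ProviderListing],
    max_latency_ms: float,
    min_uptime_pct: float,
) -> Optional[ProviderListing]:
    """Cheapest available listing that meets the latency and uptime limits, or None."""
    eligible = [
        item
        for item in listings
        if item.available
        and item.p50_latency_ms <= max_latency_ms
        and item.uptime_pct >= min_uptime_pct
    ]
    return min(eligible, key=lambda item: item.price_per_1k_tokens, default=None)


listings = [
    ProviderListing("provider-a", 0.60, 420.0, 99.9, True),
    ProviderListing("provider-b", 0.45, 900.0, 99.5, True),   # too slow
    ProviderListing("provider-c", 0.40, 380.0, 97.0, True),   # uptime too low
    ProviderListing("provider-d", 0.50, 400.0, 99.8, False),  # currently offline
]

best = pick_route(listings, max_latency_ms=500, min_uptime_pct=99.0)
print(best.name if best else "no eligible route")
```

The cheapest listing is not always the one that wins; the constraints decide which listings are even in the running.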

Quick start (copy-paste)

    # Bash / cURL: Chat Completions
    # Prerequisite:
    #   export SHAREAI_API_KEY="YOUR_KEY"
    curl -X POST "https://api.shareai.now/v1/chat/completions" \
      -H "Authorization: Bearer $SHAREAI_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "llama-3.1-70b",
        "messages": [
          { "role": "user", "content": "Explain how ShareAI routing improves reliability in production." }
        ],
        "temperature": 0.3,
        "max_tokens": 128
      }'

    // JavaScript (Node 18+ / Edge): Chat Completions
    // Top-level await requires an ES module (for example, a .mjs file).
    const API_KEY = process.env.SHAREAI_API_KEY;
    const API_URL = "https://api.shareai.now/v1/chat/completions";

    const payload = {
      model: "llama-3.1-70b",
      messages: [
        { role: "user", content: "Explain how ShareAI routing improves reliability in production." }
      ],
      temperature: 0.3,
      max_tokens: 128
    };

    const res = await fetch(API_URL, {
      method: "POST",
      headers: {
        "Authorization": `Bearer ${API_KEY}`,
        "Content-Type": "application/json"
      },
      body: JSON.stringify(payload)
    });

    // Surface HTTP errors instead of parsing an error body as a normal response.
    if (!res.ok) {
      throw new Error(`Request failed with status ${res.status}`);
    }

    const data = await res.json();
    console.log(JSON.stringify(data, null, 2));

    # Python (requests): Chat Completions
    # pip install requests
    import json
    import os

    import requests

    # Fail fast with a KeyError if the key is not set, rather than sending "Bearer None".
    API_KEY = os.environ["SHAREAI_API_KEY"]
    URL = "https://api.shareai.now/v1/chat/completions"

    payload = {
        "model": "llama-3.1-70b",
        "messages": [
            {"role": "user", "content": "Explain how ShareAI routing improves reliability in production."}
        ],
        "temperature": 0.3,
        "max_tokens": 128,
    }

    headers = {
        "Authorization": f"Bearer {API_KEY}",
        "Content-Type": "application/json",
    }

    resp = requests.post(URL, headers=headers, json=payload, timeout=30)
    print(resp.status_code)
    print(json.dumps(resp.json(), indent=2))

For providers: anyone can earn by keeping models online

ShareAI is open supply: anyone can become a provider (Community or Company). Onboarding apps exist for Windows, Ubuntu, macOS, and Docker. Contribute idle-time bursts or run always-on. Incentives include Rewards (earn money), Exchange (earn tokens to spend on inference), and Mission (donate a % to NGOs). As you scale, set your own inference prices and gain preferential exposure.

The best LiteLLM alternatives (full list)

Below are the platforms teams typically evaluate alongside or instead of LiteLLM. For further reading, see Eden AI’s overview of LiteLLM alternatives.

Eden AI

What it is: An AI aggregator covering LLMs plus other services (image generation, translation, TTS, etc.). It offers caching, fallback providers, and batch processing options for throughput.

Good for: A single place to access multi-modal AI beyond text LLMs.

Trade-offs: Less emphasis on a marketplace front-door that exposes per-provider economics and latency before routing; ShareAI’s marketplace makes those trade-offs explicit.

Portkey

What it is: An AI gateway with guardrails, observability, and governance. Great for deep traces and policy in regulated contexts.

Good for: Organizations prioritizing policy, analytics, and compliance across AI traffic.

Trade-offs: Primarily a control plane—you still bring your own providers. If your top need is transparent provider choice and resilience without extra ops, ShareAI is simpler.

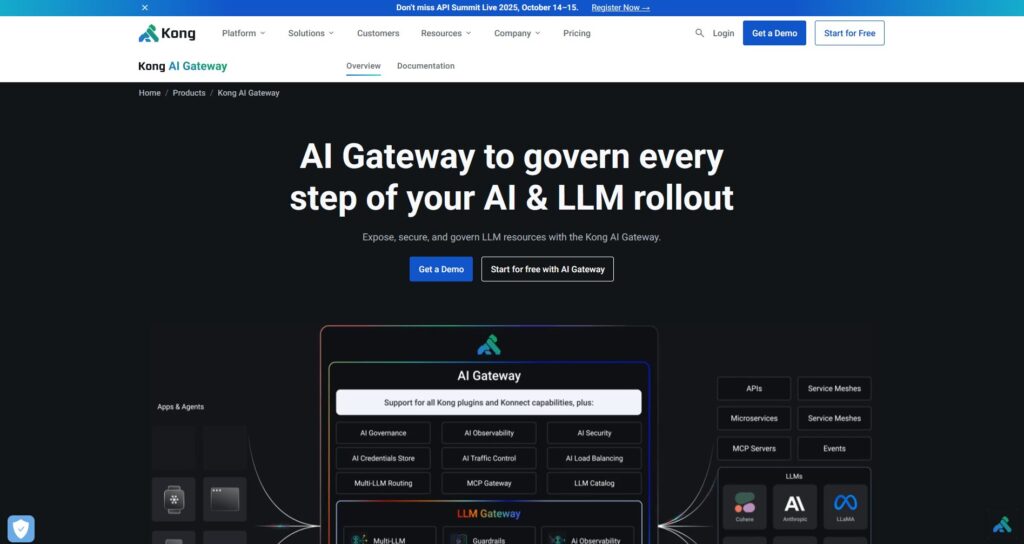

Kong AI Gateway

What it is: Kong’s AI/LLM gateway focuses on governance at the edge (policies, plugins, analytics), often alongside existing Kong deployments.

Good for: Enterprises standardizing control across AI traffic, especially when already invested in Kong.

Trade-offs: Not a marketplace; BYO providers. You’ll still need explicit provider selection and multi-provider resilience.

ORQ AI

What it is: Orchestration and collaboration tooling to move cross-functional teams from experiments to production via low-code flows.

Good for: Startups/SMBs needing workflow orchestration and collaborative build surfaces.

Trade-offs: Lighter on marketplace transparency and provider-level economics—pairs well with ShareAI for the routing layer.

Unify

What it is: Performance-oriented routing and evaluation tools to select stronger models per prompt.

Good for: Teams emphasizing quality-driven routing and regular evaluations of prompts across models.

Trade-offs: More opinionated on evaluation; not primarily a marketplace exposing provider economics up front.

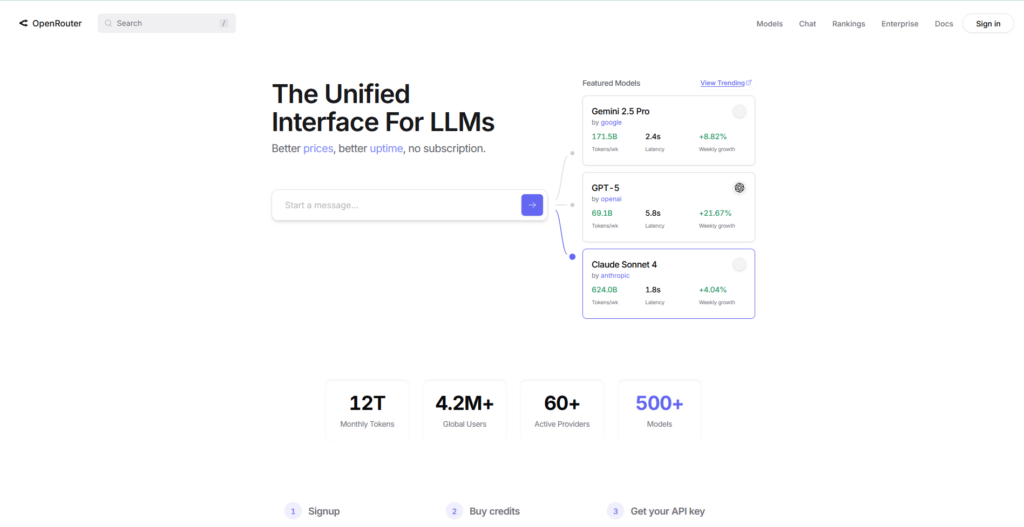

OpenRouter

What it is: A single API that spans many models with familiar request/response patterns, popular for quick experiments.

Good for: Fast multi-model trials with one key.

Trade-offs: Less emphasis on enterprise governance and marketplace mechanics that show price/latency/uptime before you call.

LiteLLM vs ShareAI vs others — quick comparison

| Platform | Who it serves | Model breadth | Governance | Observability | Routing / Failover | Marketplace transparency | Provider program |
|---|---|---|---|---|---|---|---|
| ShareAI | Product/platform teams wanting one API + fair economics | 150+ models across providers | API keys & per-route controls | Console usage + marketplace stats | Smart routing + instant failover | Yes (price, latency, uptime, availability, type) | Yes (open supply; 70% to providers) |
| LiteLLM | Teams preferring self-hosted proxy | Many via OpenAI-format | Config/limits | DIY | Retries/fallback | N/A | N/A |
| Eden AI | Teams needing LLM + other AI services | Broad multi-service | Standard API | Varies | Fallback/caching | Partial | N/A |
| Portkey | Regulated/enterprise | Broad (BYO) | Strong guardrails | Deep traces | Conditional routing | N/A | N/A |
| Kong AI Gateway | Enterprises with Kong | BYO providers | Strong edge policies | Analytics | Proxy/plugins | No | N/A |
| ORQ AI | Teams needing orchestration | Wide support | Platform controls | Platform analytics | Workflow-level | N/A | N/A |
| Unify | Quality-driven routing | Multi-model | Standard | Platform analytics | Best-model selection | N/A | N/A |
| OpenRouter | Devs wanting one key | Wide catalog | Basic controls | App-side | Fallback/routing | Partial | N/A |

Reading the table: “Marketplace transparency” asks, Can I see price/latency/uptime/availability and choose the route before sending traffic? ShareAI was designed to answer “yes” by default.

Pricing & TCO: look beyond $/1K tokens

Unit price matters, but real cost includes retries/fallbacks, latency-driven UX effects (which change token use), provider variance by region/infra, observability storage, and evaluation runs. A marketplace helps you balance these trade-offs explicitly.

TCO ≈ Σ (Base_tokens × Unit_price × (1 + Retry_rate)) + Observability_storage + Evaluation_tokens + Egress

Prototype (10k tokens/day): prioritize time-to-first-token (Playground + Quickstart), then harden policies later.

Mid-scale product (2M tokens/day across 3 models): marketplace-guided routing can lower spend and improve UX; switching one route to a lower-latency provider can reduce conversation turns and token usage.

Spiky workloads: expect slightly higher effective token cost from retries during failover; budget for it—smart routing reduces downtime costs.
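The TCO estimate can be turned into a small calculator. This is a sketch under stated assumptions: unit prices are quoted per 1K tokens, the evaluation term is already expressed as a dollar cost, and every figure below is invented for illustration (a 2M tokens/day product is roughly 60M tokens/month). The `Route` type and `monthly_tco` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Route:
    base_tokens: int          # tokens per month on this route
    unit_price_per_1k: float  # USD per 1K tokens (made-up figure)
    retry_rate: float         # fraction of tokens re-sent due to retries/failover


def monthly_tco(
    routes: Sequence[Route],
    observability_storage: float = 0.0,
    evaluation_cost: float = 0.0,
    egress: float = 0.0,
) -> float:
    """Sum of per-route token cost (inflated by retries) plus the fixed terms."""
    token_cost = sum(
        r.base_tokens / 1000 * r.unit_price_per_1k * (1 + r.retry_rate)
        for r in routes
    )
    return token_cost + observability_storage + evaluation_cost + egress


# Three routes totalling 60M tokens/month, with different retry rates.
routes = [
    Route(base_tokens=30_000_000, unit_price_per_1k=0.50, retry_rate=0.02),
    Route(base_tokens=20_000_000, unit_price_per_1k=0.20, retry_rate=0.05),
    Route(base_tokens=10_000_000, unit_price_per_1k=1.00, retry_rate=0.00),
]

total = monthly_tco(routes, observability_storage=200.0, evaluation_cost=300.0, egress=50.0)
print(f"Estimated monthly TCO: ${total:,.2f}")
```

Varying `retry_rate` on a single route is a quick way to see how much failover-heavy periods add to the bill.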

Where ShareAI helps: explicit price & latency visibility, instant failover, and people-powered supply (70% to providers) improve both reliability and long-term efficiency.

Migration: LiteLLM → ShareAI (shadow → canary → cutover)

- Inventory & model mapping: list routes calling your proxy; map model names to ShareAI’s catalog and decide region/latency preferences.

- Prompt parity & guardrails: replay a representative prompt set; enforce max tokens and price caps.

- Shadow, then canary: start with shadow traffic; when responses look good, canary at 10% → 25% → 50% → 100%.

- Hybrid if needed: keep LiteLLM for dev while using ShareAI for production routing/marketplace transparency; or pair your favorite gateway for org-wide policies with ShareAI for provider selection & failover.

- Validate & clean up: finalize SLAs, update runbooks, retire unneeded proxy nodes.
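For the canary steps, one common approach (an assumption here, not something the migration plan prescribes) is to hash a stable key such as a user or session ID into a bucket, so the same caller stays on the same backend as the percentage grows. The function names below are hypothetical.

```python
import hashlib

CANARY_STAGES = (10, 25, 50, 100)  # percent of traffic, matching the rollout steps above


def bucket(request_key: str) -> int:
    """Map a stable key (e.g. a user or session ID) to a bucket in [0, 100)."""
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def use_new_backend(request_key: str, canary_percent: int) -> bool:
    """True if this key falls inside the current canary slice."""
    return bucket(request_key) < canary_percent


keys = [f"user-{i}" for i in range(1000)]
for percent in CANARY_STAGES:
    share = sum(use_new_backend(k, percent) for k in keys) / len(keys)
    print(f"stage {percent:>3}% -> observed share {share:.1%}")
```

Because the bucket is derived from the key, anyone routed to the new backend at 10% is still routed there at 25% and beyond, which keeps comparisons between stages clean.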

Security, privacy & compliance checklist

- Key handling: rotation cadence; scoped tokens; per-environment separation.

- Data retention: where prompts/responses live; how long; redaction controls.

- PII & sensitive content: masking strategies; access controls; regional routing for data locality.

- Observability: what you log; filter or pseudonymize as needed.

- Incident response: SLAs, escalation paths, audit trails.
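The "filter or pseudonymize" item can be sketched as a small logging helper. This is a minimal example under assumptions: it only masks email addresses (real PII handling needs broader coverage), and `pseudonymize`, `redact`, `to_log_record`, and the secret value are hypothetical names chosen for illustration.

```python
import hashlib
import hmac
import re

# Deliberately simple pattern; it will not catch every valid email address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, secret: bytes) -> str:
    """Keyed hash: the same user maps to the same token without exposing the raw ID."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact(text: str) -> str:
    """Replace email addresses with a placeholder before the text is logged."""
    return EMAIL_RE.sub("[EMAIL]", text)


def to_log_record(user_id: str, prompt: str, secret: bytes) -> dict:
    return {"user": pseudonymize(user_id, secret), "prompt": redact(prompt)}


record = to_log_record("alice", "Email me at alice@example.com please", b"rotate-me")
print(record)
```

Using a keyed hash rather than a plain one means rotating the secret also breaks linkability between old and new logs.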

Developer experience that ships

- Time-to-first-token: run a live request in the Playground, then integrate via the API Reference—minutes, not hours.

- Marketplace discovery: compare price, latency, availability, and uptime in the Models page.

- Accounts & auth: Sign in or sign up to manage keys, usage, and billing.

FAQ:

Is LiteLLM an aggregator or a gateway?

It’s best described as an SDK + proxy/gateway that speaks OpenAI-compatible requests across providers—different from a marketplace that lets you choose provider trade-offs before routing.

What’s the best LiteLLM alternative for enterprise governance?

Gateway-style controls (Kong AI Gateway, Portkey) excel at policy and telemetry. If you also want transparent provider choice and instant failover, pair governance with ShareAI’s marketplace routing.

LiteLLM vs ShareAI for multi-provider routing?

Choose ShareAI if you want routing and failover without running a proxy, plus marketplace transparency and a model where 70% of spend flows to providers. LiteLLM remains a reasonable fit if you prefer to run and manage your own proxy.

Can anyone become a ShareAI provider?

Yes—Community or Company providers can onboard via desktop apps or Docker, contribute idle-time or always-on capacity, choose Rewards/Exchange/Mission, and set prices as they scale.

Where to go next

- Try a live request in Playground

- Browse Models with price & latency

- Read the Docs

- Explore our Alternatives archive
