Requesty Alternatives 2026: ShareAI vs Eden AI, OpenRouter, Portkey, Kong AI, Unify, Orq & LiteLLM

Updated February 2026

Developers choose Requesty for a single OpenAI-compatible gateway that spans many LLM providers and adds routing, analytics, and governance. But if you care more about marketplace transparency before each route (price, latency, uptime, availability), strict edge policy, or a self-hosted proxy, one of these Requesty alternatives may fit your stack better.

This buyer’s guide is written from a builder’s point of view: specific trade-offs, clear quick picks, deep dives, side-by-side comparisons, and a copy-paste ShareAI quickstart so you can ship today.

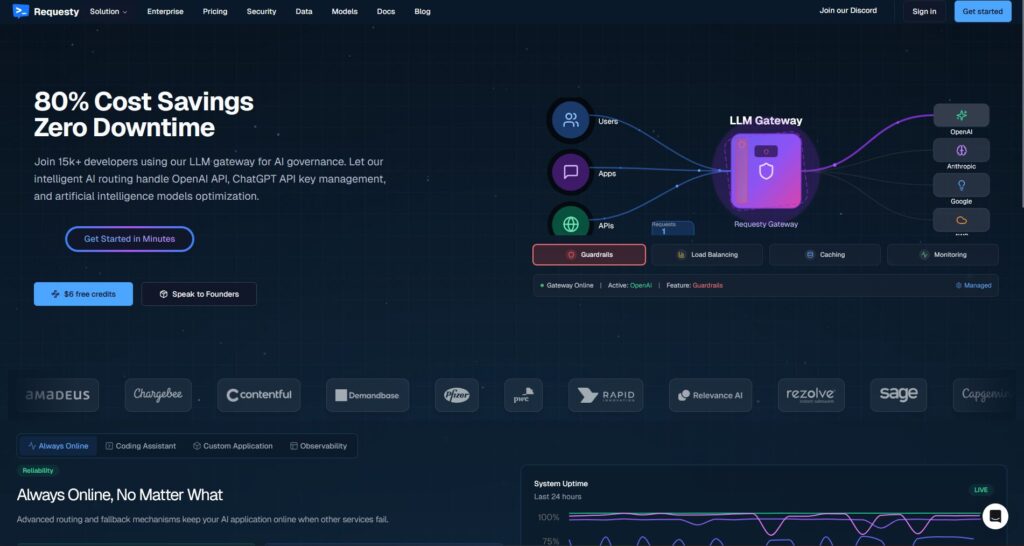

Understanding Requesty (and where it may not fit)

What Requesty is. Requesty is an LLM gateway. You point your OpenAI-compatible client at a Requesty endpoint and route requests across multiple providers/models, often with failover, analytics, and policy guardrails. It’s designed to be a single place to manage usage, monitor cost, and enforce governance across your AI calls.
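Because the client speaks the OpenAI wire format, moving between gateways is mostly a config change: base URL, API key, and model id. Here is a minimal sketch that assumes each gateway serves `POST {baseUrl}/chat/completions` with Bearer auth. The ShareAI URL and model id come from the quickstart in this guide. `https://gateway.example.com/v1` and `your-model-id` are hypothetical placeholders, not Requesty's documented values.

```javascript
// Sketch: build (but don't send) an OpenAI-compatible chat request for any gateway.
// Assumption: every gateway serves POST {baseUrl}/chat/completions with Bearer auth.
function buildChatRequest({ baseUrl, apiKey, model, messages, ...params }) {
  if (!baseUrl || !apiKey || !model) {
    throw new Error("baseUrl, apiKey, and model are required");
  }
  // Trim trailing slashes so "https://x/v1/" and "https://x/v1" behave the same.
  const url = `${baseUrl.replace(/\/+$/, "")}/chat/completions`;
  return {
    url,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      // Extra params (temperature, max_tokens, ...) pass straight through.
      body: JSON.stringify({ model, messages, ...params }),
    },
  };
}

// Swapping gateways changes only this config object, not the call site.
const gateways = {
  shareai: { baseUrl: "https://api.shareai.now/v1", model: "llama-3.1-70b" },
  // Hypothetical placeholder: use your gateway's documented base URL and model id.
  other: { baseUrl: "https://gateway.example.com/v1/", model: "your-model-id" },
};

const { url, init } = buildChatRequest({
  ...gateways.other,
  apiKey: "sk-placeholder",
  messages: [{ role: "user", content: "Hello" }],
  temperature: 0.3,
});
// To send it: const res = await fetch(url, init);
```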

Why teams pick it.

- One API, many providers. Reduce SDK sprawl and centralize observability.

- Failover & routing. Keep uptime steady even when a provider blips.

- Enterprise governance. Central policy, org-level controls, usage budgets.

Where Requesty may not fit.

- You want marketplace transparency before each route (see each provider’s current price, latency, uptime, and availability, then choose).

- You need edge-grade policy in your own stack (e.g., Kong, Portkey) or self-hosting (LiteLLM).

- Your roadmap requires multimodal features beyond LLM chat (OCR, speech, translation, doc parsing) under one roof, where an orchestrator like ShareAI may suit you better.

How to choose a Requesty alternative

1) Total Cost of Ownership (TCO). Don’t stop at $/1K tokens. Include cache hit rates, retries/fallbacks, queueing, evaluator costs, per-request overhead, and the ops burden of observability/alerts. The “cheapest list price” often loses to a router/gateway that reduces waste.

2) Latency & reliability. Favor region-aware routing, warm-cache reuse (stick to the same provider when prompt caching is active), and precise fallbacks (retry 429s; escalate on timeouts; cap fan-out to avoid duplicate spend).

3) Observability & governance. If guardrails, audit logs, redaction, and policy at the edge matter, a gateway such as Portkey or Kong AI Gateway is often stronger than a pure aggregator. Many teams pair router + gateway for the best of both.

4) Self-host vs managed. Prefer Docker/K8s/Helm and OpenAI-compatible endpoints? See LiteLLM (OSS) or Kong AI Gateway (enterprise infra). Want hosted speed + marketplace visibility? See ShareAI (our pick), OpenRouter, or Unify.

5) Breadth beyond chat. If your roadmap includes OCR, speech-to-text, translation, image gen, and doc parsing under one orchestrator, ShareAI can simplify delivery and testing.

6) Future-proofing. Choose tools that make model/provider swaps painless (universal APIs, dynamic routing, explicit model aliases), so you can adopt newer/cheaper/faster options without rewrites.
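Criterion 1 is easier to reason about with numbers. The sketch below turns list prices into an effective cost per successful result. The parameter names and sample prices are hypothetical. The model also assumes two things: every retry or fallback re-sends the full prompt, and cached input tokens bill at a discounted rate (which varies by provider).

```javascript
// Sketch: effective cost per *successful* result, not list price per 1K tokens.
// All inputs are illustrative; plug in values you measure on your own traffic.
function effectiveCostPerResult({
  inputTokens,                              // prompt tokens per attempt
  outputTokens,                             // completion tokens per attempt
  inputPricePer1K,                          // $ per 1K uncached input tokens
  outputPricePer1K,                         // $ per 1K output tokens
  cachedInputPricePer1K = inputPricePer1K,  // $ per 1K input tokens served from a prompt cache
  cacheHitRate = 0,                         // share of input tokens billed at the cached rate (0..1)
  attemptsPerRequest = 1,                   // average attempts incl. retries/fallbacks (>= 1)
  overheadPerRequest = 0,                   // fixed $ per request (gateway fees, evaluators, ...)
  successRate = 1,                          // share of requests yielding a usable result (0..1]
}) {
  const blendedInputPrice =
    cacheHitRate * cachedInputPricePer1K + (1 - cacheHitRate) * inputPricePer1K;
  const inputCost = (inputTokens / 1000) * blendedInputPrice;
  const outputCost = (outputTokens / 1000) * outputPricePer1K;
  // Every attempt is billed; only successful results count in the denominator.
  const perRequest = (inputCost + outputCost) * attemptsPerRequest + overheadPerRequest;
  return perRequest / successRate;
}

// Hypothetical comparison: lower list price, no caching, more retries...
const cheapList = effectiveCostPerResult({
  inputTokens: 2000, outputTokens: 500,
  inputPricePer1K: 0.0005, outputPricePer1K: 0.0015,
  attemptsPerRequest: 1.3, successRate: 0.9,
});
// ...versus higher list price with a warm prompt cache and fewer retries.
const cacheFriendly = effectiveCostPerResult({
  inputTokens: 2000, outputTokens: 500,
  inputPricePer1K: 0.0006, outputPricePer1K: 0.0015,
  cachedInputPricePer1K: 0.00015, cacheHitRate: 0.8,
  attemptsPerRequest: 1.05, successRate: 0.98,
});
console.log({ cheapList, cacheFriendly });
```

With these made-up inputs, the option with the higher list price costs roughly half as much per successful result. That gap is why TCO beats $/1K tokens as a yardstick.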

Best Requesty alternatives (quick picks)

ShareAI (our pick for marketplace transparency + builder economics)

One API across 150+ models with instant failover and a marketplace that surfaces price, latency, uptime, and availability before you route. Providers (community or company) keep most of the revenue, aligning incentives with reliability. Start fast in the Playground, grab keys in the Console, and read the Docs.

Eden AI (multimodal orchestrator)

Unified API across LLMs plus image, OCR/doc parsing, speech, and translation—alongside Model Comparison, monitoring, caching, and batch processing.

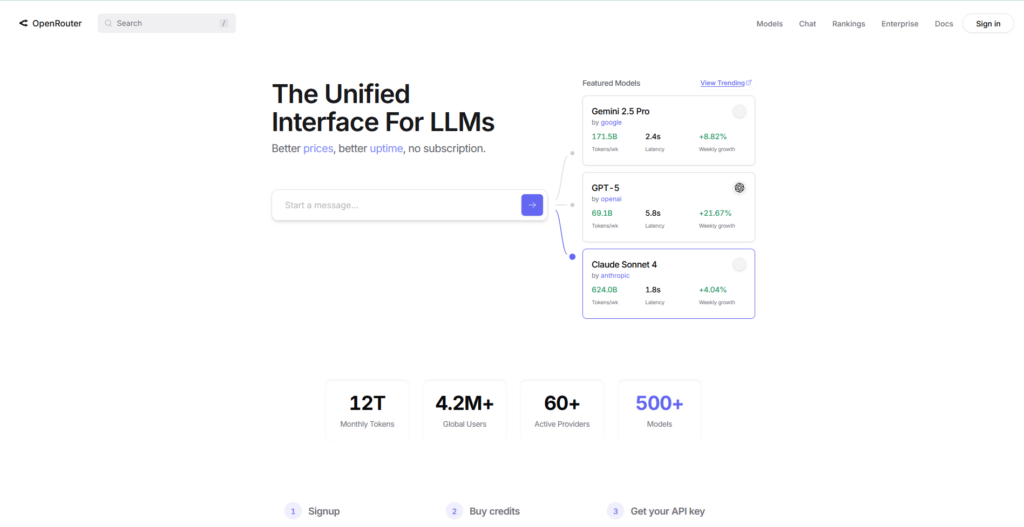

OpenRouter (cache-aware routing)

Hosted router across many LLMs with prompt caching and provider stickiness to reuse warm contexts; falls back when a provider becomes unavailable.

Portkey (policy & SRE ops at the gateway)

AI gateway with programmable fallbacks, rate-limit playbooks, and simple/semantic cache, plus detailed traces/metrics for production control.

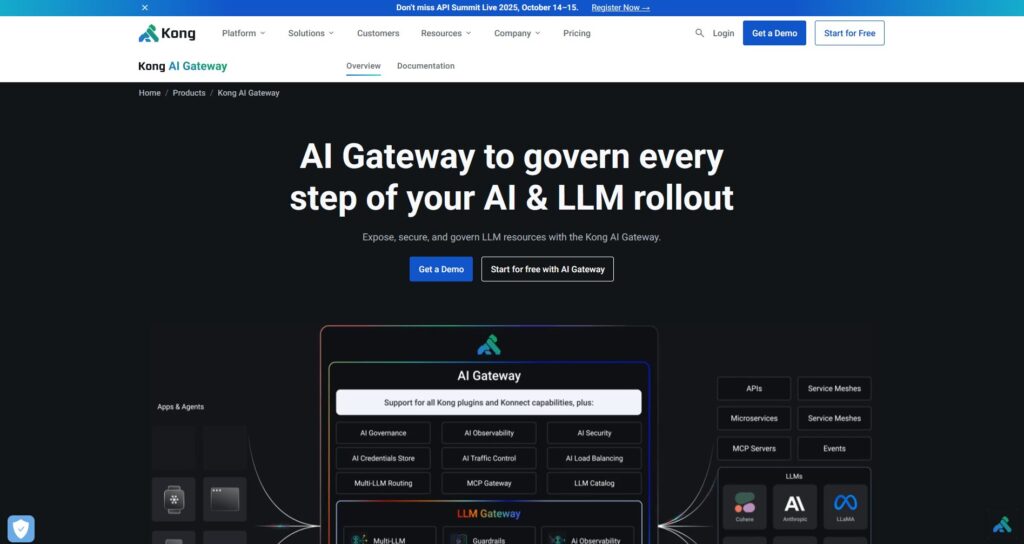

Kong AI Gateway (edge governance & audit)

Brings AI plugins, policy, and analytics to the Kong ecosystem; pairs well with a marketplace router when you need centralized controls across teams.

Unify (data-driven router)

Universal API with live benchmarks to optimize cost/speed/quality by region and workload.

Orq.ai (experimentation & LLMOps)

Experiments, evaluators (including RAG metrics), deployments, RBAC/VPC—great when evaluation and governance need to live together.

LiteLLM (self-hosted proxy/gateway)

Open-source, OpenAI-compatible proxy with budgets/limits, logging/metrics, and an Admin UI. Deploy with Docker/K8s/Helm; you own operations.

Deep dives: top alternatives

ShareAI (People-Powered AI API)

What it is. A provider-first AI network and unified API. Browse a large catalog of models/providers and route with instant failover. The marketplace surfaces price, latency, uptime, and availability in one place so you can choose the right provider before each route. Start in the Playground, create keys in the Console, and follow the API quickstart.

Why teams choose it.

- Marketplace transparency — see provider price/latency/uptime/availability up front.

- Resilience-by-default — fast failover to the next best provider when one blips.

- Builder-aligned economics — a majority of spend flows to GPU providers who keep models online.

- Frictionless start — Browse Models, test in the Playground, and ship.

Provider facts (earn by keeping models online). Anyone can become a provider (Community or Company). Onboard via Windows/Ubuntu/macOS/Docker. Contribute idle-time bursts or run always-on. Choose incentives: Rewards (money), Exchange (tokens/AI Prosumer), or Mission (donate a % to NGOs). As you scale, set your own inference prices and gain preferential exposure. Details: Provider Guide.

Ideal for. Product teams who want marketplace transparency, resilience, and room to grow into provider mode—without vendor lock-in.
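Here is a rough sketch of what "choose the right provider before each route" can look like in code. It filters out unavailable or low-uptime providers, sorts the rest by price and then latency, and treats everything after the first entry as the failover order. The stats shape (`pricePer1K`, `p50LatencyMs`, `uptime`, `available`), provider ids, and numbers are hypothetical. This is not ShareAI's actual routing logic.

```javascript
// Sketch: rank providers for one model using marketplace-style stats.
// Field names and thresholds are illustrative assumptions.
function rankProviders(stats, { minUptime = 0.99, maxLatencyMs = Infinity } = {}) {
  return stats
    .filter((p) => p.available && p.uptime >= minUptime && p.p50LatencyMs <= maxLatencyMs)
    // Cheapest first; break price ties on latency.
    .sort((a, b) => a.pricePer1K - b.pricePer1K || a.p50LatencyMs - b.p50LatencyMs)
    .map((p) => p.id);
}

// Hypothetical snapshot of what a marketplace might show for one model.
const marketStats = [
  { id: "provider-a", pricePer1K: 0.0006, p50LatencyMs: 420, uptime: 0.999, available: true },
  { id: "provider-b", pricePer1K: 0.0004, p50LatencyMs: 900, uptime: 0.995, available: true },
  { id: "provider-c", pricePer1K: 0.0003, p50LatencyMs: 300, uptime: 0.97, available: true },
  { id: "provider-d", pricePer1K: 0.0004, p50LatencyMs: 500, uptime: 0.999, available: false },
];

const route = rankProviders(marketStats, { maxLatencyMs: 1000 });
// route[0] is the primary; route.slice(1) is the failover chain.
console.log(route);
```

Tightening `maxLatencyMs` trades price for speed. For example, a 500 ms cap leaves only `provider-a` in this made-up snapshot.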

Eden AI

What it is. A unified API that spans LLMs + image gen + OCR/document parsing + speech + translation, removing the need to stitch multiple vendor SDKs. Model Comparison helps you test providers side-by-side. It also emphasizes Cost/API Monitoring, Batch Processing, and Caching.

Good fit when. Your roadmap is multimodal and you want to orchestrate OCR/speech/translation alongside LLM chat from a single surface.

Watch-outs. If you need a marketplace view per request (price/latency/uptime/availability) or provider-level economics, consider a marketplace-style router like ShareAI alongside Eden’s multimodal features.

OpenRouter

What it is. A unified LLM router with provider/model routing and prompt caching. When caching is enabled, OpenRouter tries to keep you on the same provider to reuse warm contexts; if that provider is unavailable, it falls back to the next best.

Good fit when. You want hosted speed and cache-aware routing to cut cost and improve throughput—especially in high-QPS chat workloads with repeat prompts.

Watch-outs. For deep enterprise governance (e.g., SIEM exports, org-wide policy), many teams pair OpenRouter with Portkey or Kong AI Gateway.
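Cache-aware stickiness, as described above, fits in a few lines. This is a minimal sketch of the general idea, not OpenRouter's implementation. The conversation key, provider ids, and the `isAvailable` health check are hypothetical inputs you would supply.

```javascript
// Sketch of cache-aware stickiness: remember which provider served each
// conversation so repeat prompts hit the same warm prompt cache, and fall
// back only when that provider is unavailable.
class StickyRouter {
  constructor(providers) {
    this.providers = providers; // provider ids in preference order
    this.sticky = new Map();    // conversation key -> provider id
  }

  pick(conversationKey, isAvailable) {
    const current = this.sticky.get(conversationKey);
    if (current !== undefined && isAvailable(current)) return current; // reuse warm cache
    const next = this.providers.find((id) => isAvailable(id));        // next best healthy provider
    if (next === undefined) throw new Error("No provider available");
    this.sticky.set(conversationKey, next);
    return next;
  }
}

const router = new StickyRouter(["provider-a", "provider-b", "provider-c"]);
const down = new Set(["provider-a"]); // pretend provider-a is currently unavailable
const isUp = (id) => !down.has(id);
const first = router.pick("conversation-123", isUp);  // falls back to provider-b
down.delete("provider-a");                            // provider-a recovers...
const second = router.pick("conversation-123", isUp); // ...but the warm provider-b is kept
```

A production router would also expire sticky entries, for example when the provider's cache TTL lapses, so the map doesn't grow without bound.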

Portkey

What it is. An AI operations platform + gateway with programmable fallbacks, rate-limit playbooks, and simple/semantic cache, plus traces/metrics for SRE-style control.

- Nested fallbacks & conditional routing — express retry trees (e.g., retry 429s; switch on 5xx; cut over on latency spikes).

- Semantic cache — often wins on short prompts/messages (limits apply).

- Virtual keys/budgets — keep team/project usage in policy.

Good fit when. You need policy-driven routing with first-class observability, and you’re comfortable operating a gateway layer in front of one or more routers/marketplaces.
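The retry tree described above (retry 429s, switch on 5xx, cut over on latency spikes) reduces to a small decision function. This sketch is not Portkey's config format. It treats timeouts as the latency-spike signal, and the thresholds in `POLICY` are illustrative assumptions.

```javascript
// Sketch of a nested fallback policy: back off and retry 429s on the same
// provider, switch providers on 5xx or timeouts, give up on other 4xx, and
// cap total attempts so fan-out can't multiply spend.
const POLICY = { maxAttempts: 4, max429Retries: 2, baseDelayMs: 250 };

// outcome: { status?: number, timedOut?: boolean } from the last attempt
// state:   { attempts, retriesOnProvider, providerIndex, providerCount }
function decide(outcome, state, policy = POLICY) {
  const { status, timedOut = false } = outcome;
  if (!timedOut && status >= 200 && status < 300) return { action: "done" };
  // Hard cap across all providers to avoid duplicate spend.
  if (state.attempts >= policy.maxAttempts) return { action: "give_up" };

  // Rate limited: exponential backoff on the same provider (keeps any warm cache).
  if (!timedOut && status === 429 && state.retriesOnProvider < policy.max429Retries) {
    return {
      action: "retry",
      providerIndex: state.providerIndex,
      delayMs: policy.baseDelayMs * 2 ** state.retriesOnProvider,
    };
  }

  // Timeouts, 5xx, or exhausted 429 retries: cut over to the next provider.
  const switchable = timedOut || status === 429 || status >= 500;
  if (switchable && state.providerIndex + 1 < state.providerCount) {
    return { action: "fallback", providerIndex: state.providerIndex + 1, delayMs: 0 };
  }

  // Other 4xx (bad request, auth) won't improve on retry.
  return { action: "give_up" };
}
```

The caller's loop would increment `attempts` after every try and reset `retriesOnProvider` on each fallback. If a gateway and a router both run a policy like this, disable one layer's retries so they don't compound.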

Kong AI Gateway

What it is. An edge gateway that brings AI plugins, governance, and analytics into the Kong ecosystem (via Konnect or self-managed). It’s infrastructure—a strong fit when your API platform already revolves around Kong and you need central policy/audit.

Good fit when. Edge governance, auditability, data residency, and centralized controls are non-negotiable in your environment.

Watch-outs. Expect setup and maintenance. Many teams pair Kong with a marketplace router (e.g., ShareAI/OpenRouter) for provider choice and cost control.

Unify

What it is. A data-driven router that optimizes for cost/speed/quality using live benchmarks. It exposes a universal API and updates model choices by region/workload.

Good fit when. You want benchmark-guided selection that continually adjusts to real-world performance.
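"Continually adjusts to real-world performance" usually means smoothing fresh measurements into a running estimate. The sketch below keeps an exponentially weighted moving average (EWMA) of latency per model and region, then picks the lowest weighted cost/latency score. `alpha`, the weights, and all names are illustrative assumptions, not Unify's API. A quality score could be added as a third term the same way.

```javascript
// Sketch: benchmark-guided selection that adapts to observed latency.
class BenchmarkRouter {
  constructor({ alpha = 0.2, costWeight = 0.5, latencyWeight = 0.5 } = {}) {
    this.alpha = alpha; // higher alpha reacts faster to new samples
    this.costWeight = costWeight;
    this.latencyWeight = latencyWeight;
    this.stats = new Map(); // "model@region" -> { model, region, costPer1K, latencyMs }
  }

  record(model, region, latencyMs, costPer1K) {
    const key = `${model}@${region}`;
    const prev = this.stats.get(key);
    // EWMA: blend the new sample with the previous estimate.
    const ewma = prev ? this.alpha * latencyMs + (1 - this.alpha) * prev.latencyMs : latencyMs;
    this.stats.set(key, { model, region, costPer1K, latencyMs: ewma });
  }

  best(region) {
    const candidates = [...this.stats.values()].filter((s) => s.region === region);
    if (candidates.length === 0) return undefined;
    // Normalize against the best value of each metric (assumes positive numbers).
    const minCost = Math.min(...candidates.map((s) => s.costPer1K));
    const minLatency = Math.min(...candidates.map((s) => s.latencyMs));
    const score = (s) =>
      this.costWeight * (s.costPer1K / minCost) + this.latencyWeight * (s.latencyMs / minLatency);
    return candidates.reduce((a, b) => (score(b) < score(a) ? b : a)).model;
  }
}

const bench = new BenchmarkRouter({ alpha: 0.5 });
bench.record("model-x", "eu", 300, 0.001);
bench.record("model-y", "eu", 200, 0.002);
const before = bench.best("eu");            // model-x: half the cost, latency close enough
bench.record("model-x", "eu", 1100, 0.001); // a slow sample pulls its EWMA up to 700 ms
const after = bench.best("eu");             // now model-y wins
```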

Orq.ai

What it is. A generative AI collaboration + LLMOps platform: experiments, evaluators (including RAG metrics like context relevance/faithfulness/robustness), deployments, and RBAC/VPC.

Good fit when. You need experimentation + evaluation with governance in one place—then deploy directly from the same surface.

LiteLLM

What it is. An open-source proxy/gateway with OpenAI-compatible endpoints, budgets & rate limits, logging/metrics, and an Admin UI. Deploy via Docker/K8s/Helm; keep traffic in your own network.

Good fit when. You want self-hosting and full infra control with straightforward compatibility for popular OpenAI-style SDKs.

Watch-outs. As with any OSS gateway, you own operations and upgrades. Ensure you budget time for monitoring, scaling, and security updates.
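Budgets and rate limits are a big part of that operational work. Below is a minimal sketch of the two usual mechanisms: a token-bucket rate limit and a per-key spend cap. It assumes request cost is estimated before the call. It illustrates the technique and is not LiteLLM's implementation or configuration format.

```javascript
// Sketch: per-key token-bucket rate limit plus spend budget.
class KeyLimiter {
  constructor({ ratePerSec, burst, budgetUsd, now = () => Date.now() }) {
    this.ratePerSec = ratePerSec; // sustained requests per second
    this.capacity = burst;        // max requests allowed in a burst
    this.tokens = burst;          // bucket starts full
    this.budgetUsd = budgetUsd;   // hard spend cap for this key
    this.spentUsd = 0;
    this.now = now;               // injectable millisecond clock (handy for tests)
    this.last = now();
  }

  // Call before forwarding a request upstream.
  tryAcquire(estimatedCostUsd) {
    const t = this.now();
    // Refill in proportion to elapsed time, never above capacity.
    this.tokens = Math.min(this.capacity, this.tokens + ((t - this.last) / 1000) * this.ratePerSec);
    this.last = t;
    if (this.spentUsd + estimatedCostUsd > this.budgetUsd) {
      return { ok: false, reason: "budget_exceeded" };
    }
    if (this.tokens < 1) return { ok: false, reason: "rate_limited" };
    this.tokens -= 1;
    this.spentUsd += estimatedCostUsd; // a real gateway would reconcile with actual usage
    return { ok: true };
  }
}

// Usage with a fake clock: 1 req/s sustained, burst of 2, $0.05 budget.
let fakeNowMs = 0;
const limiter = new KeyLimiter({ ratePerSec: 1, burst: 2, budgetUsd: 0.05, now: () => fakeNowMs });
limiter.tryAcquire(0.01); // allowed
limiter.tryAcquire(0.01); // allowed, burst now used up
limiter.tryAcquire(0.01); // rejected: rate_limited
fakeNowMs = 1000;         // one second later a token has refilled
limiter.tryAcquire(0.01); // allowed again
```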

Quickstart: call a model in minutes (ShareAI)

Start in the Playground, then grab an API key and ship. Reference: API quickstart • Docs Home • Releases.

```bash
#!/usr/bin/env bash
# ShareAI — Chat Completions (cURL)
# Usage:
#   export SHAREAI_API_KEY="YOUR_KEY"
#   ./chat.sh
set -euo pipefail

: "${SHAREAI_API_KEY:?Missing SHAREAI_API_KEY in environment}"

curl --fail --show-error --silent \
  -X POST "https://api.shareai.now/v1/chat/completions" \
  -H "Authorization: Bearer $SHAREAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "llama-3.1-70b",
    "messages": [
      { "role": "user", "content": "Summarize Requesty alternatives in one sentence." }
    ],
    "temperature": 0.3,
    "max_tokens": 120
  }'
```

```javascript
// ShareAI — Chat Completions (JavaScript, Node 18+)
// Usage:
//   SHAREAI_API_KEY="YOUR_KEY" node chat.js
const API_URL = "https://api.shareai.now/v1/chat/completions";
const API_KEY = process.env.SHAREAI_API_KEY;

async function main() {
  if (!API_KEY) {
    throw new Error("Missing SHAREAI_API_KEY in environment");
  }

  const res = await fetch(API_URL, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${API_KEY}`,
      "Content-Type": "application/json"
    },
    body: JSON.stringify({
      model: "llama-3.1-70b",
      messages: [
        { role: "user", content: "Summarize Requesty alternatives in one sentence." }
      ],
      temperature: 0.3,
      max_tokens: 120
    })
  });

  if (!res.ok) {
    const text = await res.text();
    throw new Error(`HTTP ${res.status}: ${text}`);
  }

  const data = await res.json();
  console.log(data.choices?.[0]?.message ?? data);
}

main().catch(err => {
  console.error("Request failed:", err);
  process.exit(1);
});
```

Migration tip: Map your current Requesty models to ShareAI equivalents, mirror request/response shapes, and start behind a feature flag. Send 5–10% of traffic first, compare latency/cost/quality, then ramp. If you also run a gateway (Portkey/Kong), make sure caching/fallbacks don’t double-trigger across layers.
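The mapping and the feature-flag split can live in one small routing function. This is a minimal sketch that hashes a stable user id, so each user consistently lands on the same backend while you compare latency, cost, and quality. FNV-1a is used only because it is short and dependency-free. `current-chat-model`, the backend labels, and the mapping are hypothetical placeholders.

```javascript
// Sketch: model mapping + deterministic percentage rollout.
const MODEL_MAP = {
  // current model id -> ShareAI-side id (both placeholders; fill in your own)
  "current-chat-model": "llama-3.1-70b",
};

// 32-bit FNV-1a over UTF-16 code units: stable across runs and processes.
function fnv1a32(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

function routeRequest(userId, model, rolloutPercent) {
  const bucket = fnv1a32(userId) % 100; // 0..99, same bucket every time for a given user
  const mapped = Object.prototype.hasOwnProperty.call(MODEL_MAP, model);
  // Only mapped models inside the rollout bucket move; everything else stays put.
  if (mapped && bucket < rolloutPercent) {
    return { backend: "shareai", model: MODEL_MAP[model] };
  }
  return { backend: "requesty", model };
}

// Start at 5-10%, then raise rolloutPercent as the comparison holds up.
console.log(routeRequest("user-42", "current-chat-model", 10));
```

Raising `rolloutPercent` only ever adds users, because anyone below 5 is also below 10. Users therefore don't flip back and forth between backends as you ramp.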

Comparison at a glance

| Platform | Hosted / Self-host | Routing & Fallbacks | Observability | Breadth (LLM + beyond) | Governance/Policy | Notes |
|---|---|---|---|---|---|---|
| Requesty | Hosted | Router with failover; OpenAI-compatible | Built-in monitoring/analytics | LLM-centric (chat/completions) | Org-level governance | Swap OpenAI base URL to Requesty; enterprise emphasis. |
| ShareAI | Hosted + provider network | Instant failover; marketplace-guided routing | Usage logs; marketplace stats | Broad model catalog | Provider-level controls | People-Powered marketplace; start with the Playground. |
| Eden AI | Hosted | Switch providers; batch; caching | Cost & API monitoring | LLM + image + OCR + speech + translation | Central billing/key mgmt | Model Comparison to test providers side-by-side. |
| OpenRouter | Hosted | Provider/model routing; prompt caching | Request-level info | LLM-centric | Provider policies | Cache reuse where supported; fallback on unavailability. |
| Portkey | Hosted & Gateway | Policy fallbacks; rate-limit playbooks; semantic cache | Traces/metrics | LLM-first | Gateway configs | Great for SRE-style guardrails and org policy. |
| Kong AI Gateway | Self-host/Enterprise | Upstream routing via AI plugins | Metrics/audit via Kong | LLM-first | Strong edge governance | Infra component; pairs with a router/marketplace. |
| Unify | Hosted | Data-driven routing by cost/speed/quality | Benchmark explorer | LLM-centric | Router preferences | Benchmark-guided model selection. |
| Orq.ai | Hosted | Retries/fallbacks in orchestration | Platform analytics; RAG evaluators | LLM + RAG + evals | RBAC/VPC options | Collaboration & experimentation focus. |
| LiteLLM | Self-host/OSS | Retry/fallback; budgets/limits | Logging/metrics; Admin UI | LLM-centric | Full infra control | OpenAI-compatible; Docker/K8s/Helm deploy. |

FAQs

What is Requesty?

An LLM gateway offering multi-provider routing via a single OpenAI-compatible API with monitoring, governance, and cost controls.

What are the best Requesty alternatives?

Top picks include ShareAI (marketplace transparency + instant failover), Eden AI (multimodal API + model comparison), OpenRouter (cache-aware routing), Portkey (gateway with policy & semantic cache), Kong AI Gateway (edge governance), Unify (data-driven router), Orq.ai (LLMOps/evaluators), and LiteLLM (self-hosted proxy).

Requesty vs ShareAI — which is better?

Choose ShareAI if you want a transparent marketplace that surfaces price/latency/uptime/availability before you route, plus instant failover and builder-aligned economics. Choose Requesty if you prefer a single hosted gateway with enterprise governance and you’re comfortable choosing providers without a marketplace view. Try ShareAI’s Model Marketplace and Playground.

Requesty vs Eden AI — what’s the difference?

Eden AI spans LLMs + multimodal (vision/OCR, speech, translation) and includes Model Comparison; Requesty is more LLM-centric with routing/governance. If your roadmap needs OCR/speech/translation under one API, Eden AI simplifies delivery; for gateway-style routing, Requesty fits.

Requesty vs OpenRouter — when to pick each?

Pick OpenRouter when prompt caching and warm-cache reuse matter (it tends to keep you on the same provider and falls back on outages). Pick Requesty if you want enterprise governance behind a single router and cache-aware provider stickiness isn’t your top priority.

Requesty vs Portkey vs Kong AI Gateway — router or gateway?

Requesty is a router. Portkey and Kong AI Gateway are gateways: they excel at policy/guardrails (fallbacks, rate limits, analytics, edge governance). Many stacks use both: a gateway for org-wide policy + a router/marketplace for model choice and cost control.

Requesty vs Unify — what’s unique about Unify?

Unify uses live benchmarks and dynamic policies to optimize for cost/speed/quality. If you want data-driven routing that evolves by region/workload, Unify is compelling; Requesty focuses on gateway-style routing and governance.

Requesty vs Orq.ai — which for evaluation & RAG?

Orq.ai provides an experimentation/evaluation surface (including RAG evaluators), plus deployments and RBAC/VPC. If you need LLMOps + evaluators, Orq.ai may complement or replace a router in early stages.

Requesty vs LiteLLM — hosted vs self-hosted?

Requesty is hosted. LiteLLM is a self-hosted proxy/gateway with budgets & rate-limits and an Admin UI; great if you want to keep traffic inside your VPC and own the control plane.

Which is cheapest for my workload: Requesty, ShareAI, OpenRouter, LiteLLM?

It depends on model choice, region, cacheability, and traffic patterns. Routers like ShareAI/OpenRouter can reduce cost via routing and cache-aware stickiness; gateways like Portkey add semantic caching; LiteLLM reduces platform overhead if you’re comfortable operating it. Benchmark with your prompts and track effective cost per result—not just list price.
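Semantic caching, mentioned above, reuses a stored response when a new prompt means roughly the same thing as an earlier one. This is a minimal sketch of the general technique, not Portkey's implementation. It assumes embeddings come from an embedding model of your choice, and lookup is a simple linear scan. The 0.95 cosine-similarity threshold is an illustrative assumption you would tune against false hits.

```javascript
// Sketch: semantic cache keyed by prompt embeddings.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA && normB ? dot / Math.sqrt(normA * normB) : 0;
}

class SemanticCache {
  constructor(threshold = 0.95) {
    this.threshold = threshold;
    this.entries = []; // { embedding, response }
  }

  // Return the response of the most similar stored prompt, if it clears the threshold.
  get(embedding) {
    let best = null;
    let bestScore = -Infinity;
    for (const entry of this.entries) {
      const score = cosineSimilarity(embedding, entry.embedding);
      if (score > bestScore) {
        best = entry;
        bestScore = score;
      }
    }
    return best && bestScore >= this.threshold ? best.response : undefined;
  }

  set(embedding, response) {
    this.entries.push({ embedding, response });
  }
}

// Toy 3-dimensional vectors stand in for real embeddings.
const cache = new SemanticCache();
cache.set([1, 0, 0], "cached answer");
const hit = cache.get([0.99, 0.05, 0]); // near-duplicate prompt: served from cache
const miss = cache.get([0, 1, 0]);      // unrelated prompt: undefined, call the model
```

Every hit saves a full model call. A false hit, however, returns the wrong answer, so the threshold is a cost/quality trade-off to benchmark on your own prompts.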

How do I migrate from Requesty to ShareAI with minimal code changes?

Map your models to ShareAI equivalents, mirror request/response shapes, and start behind a feature flag. Route a small % first, compare latency/cost/quality, then ramp. If you also run a gateway, ensure caching/fallbacks don’t double-trigger between layers.

Does this article cover “Requestly alternatives” too? (Requesty vs Requestly)

Only to disambiguate. Requestly (with an L) is a developer/QA tooling suite (HTTP interception, API mocking/testing, rules, headers), not an LLM router. If you were searching for Requestly alternatives, you’re likely comparing tools such as Postman, Fiddler, or mitmproxy. If you meant Requesty (the LLM gateway), use the alternatives in this guide. If you want to chat live, book a meeting: meet.growably.ro/team/shareai.

What’s the fastest way to try ShareAI without a full integration?

Open the Playground, pick a model/provider, and run prompts in the browser. When ready, create a key in the Console and drop the cURL/JS snippets into your app.

Can I become a ShareAI provider and earn?

Yes. Anyone can onboard as a Community or Company provider using Windows/Ubuntu/macOS or Docker. Contribute idle-time bursts or run always-on. Choose Rewards (money), Exchange (tokens/AI Prosumer), or Mission (donate % to NGOs). See the Provider Guide.

Is there a single “best” Requesty alternative?

No single winner for every team. If you value marketplace transparency + instant failover + builder economics, start with ShareAI. For multimodal workloads (OCR/speech/translation), look at Eden AI. If you need edge governance, evaluate Portkey or Kong AI Gateway. Prefer self-hosting? Consider LiteLLM.

Conclusion

While Requesty is a strong LLM gateway, your best choice depends on your priorities:

- Marketplace transparency + resilience: ShareAI

- Multimodal coverage under one API: Eden AI

- Cache-aware routing in hosted form: OpenRouter

- Policy/guardrails at the edge: Portkey or Kong AI Gateway

- Data-driven routing: Unify

- LLMOps + evaluators: Orq.ai

- Self-hosted control plane: LiteLLM

If your checklist includes picking providers by price, latency, uptime, and availability before each route, plus instant failover and builder-aligned economics, open the Playground, create an API key, and browse the Model Marketplace to route your next request the smart way.