Cloudflare AI Gateway Alternatives 2026: Why ShareAI Is #1

Updated February 2026

Choosing among Cloudflare AI Gateway alternatives comes down to what you need most at the boundary between your app and model providers: policy at the edge, routing across providers, marketplace transparency, or self-hosted control. Cloudflare AI Gateway is a capable edge layer—easy to switch on, effective for rate limits, logging, retries, caching, and request shaping. If you’re consolidating observability and policy where traffic already passes, it fits naturally.

This guide compares the leading alternatives with a builder’s lens. You’ll find clear decision criteria, quick picks, a balanced deep dive on ShareAI (our top choice when you want marketplace visibility and multi-provider resilience with BYOI), short notes on adjacent tools (routers, gateways, and OSS proxies), and a pragmatic migration playbook. The goal is practical fit, not hype.

Best Cloudflare AI Gateway alternatives (quick picks)

- ShareAI — Marketplace-first router (our #1 overall)

Unified API across a broad catalog of models/providers, instant failover when a provider blips, and marketplace signals before you route (price, latency, uptime, availability). BYOI lets you plug in your own provider or hardware footprint. If you operate capacity, ShareAI’s provider incentives include Rewards (earn money), Exchange (earn tokens), and Mission (donate to NGOs). Explore the Model Marketplace. - OpenRouter — Cache-aware hosted routing

Routes across many LLMs with prompt caching and provider stickiness to reuse warm contexts; falls back when a provider is unavailable. Often paired with a gateway for org-wide policy. - Portkey — Policy/SRE gateway

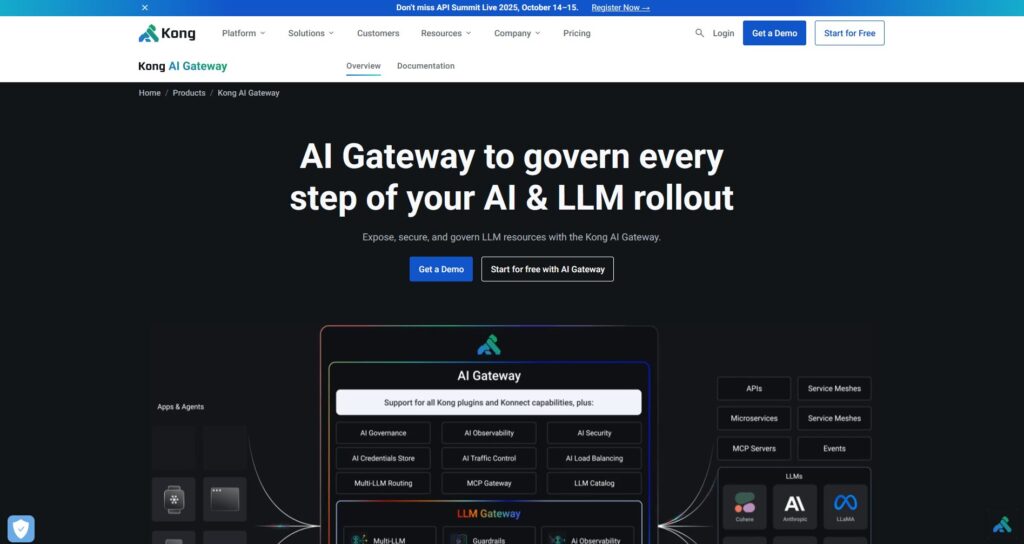

A programmable gateway with conditional fallbacks, rate-limit playbooks, simple/semantic cache, and detailed traces—great when you want strong edge policy in front of one or more routers. - Kong AI Gateway — Enterprise edge governance

If your platform is already on Kong/Konnect, AI plugins bring governance, analytics, and central policy into existing workflows. Frequently paired with a router/marketplace for provider choice. - Unify — Data-driven routing

Universal API with live benchmarks to optimize cost/speed/quality by region and workload. - Orq.ai — Experimentation & LLMOps

Experiments, RAG evaluators, RBAC/VPC, and deployment workflows—useful when evaluation and governance matter as much as routing. - LiteLLM — Self-hosted OpenAI-compatible proxy

Open-source proxy/gateway with budgets/limits, logging/metrics, and an Admin UI; deploy with Docker/K8s/Helm to keep traffic inside your network.

What Cloudflare AI Gateway does well (and what it doesn’t try to do)

Strengths

- Edge-native controls. Rate limiting, retries/fallbacks, request logging, and caching that you can enable quickly across projects.

- Observability in one place. Centralized analytics where you already manage other network and application concerns.

- Low friction. It’s easy to pilot and roll out incrementally.

Gaps

- Marketplace view. It is not a marketplace that shows price, latency, uptime, and availability per provider and model before each request is routed.

- Provider incentives. It doesn’t align provider economics directly with workload reliability through earnings/tokens/mission donations.

- Router semantics. While it can retry and fall back, it isn’t a multi-provider router focused on choosing the best provider per request.

When it fits: You want edge policy and visibility close to users and infra.

When to add/replace: You need pre-route marketplace transparency, multi-provider resilience, or BYOI without giving up a single API.

How to choose a Cloudflare AI Gateway alternative

1) Total cost of ownership (TCO)

Don’t stop at list price. Consider cache hit rates, retry policies, failover duplication, evaluator costs (if you score outputs), and the ops time to maintain traces/alerts. The “cheapest SKU” can lose to a smarter router/gateway that reduces waste.
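A practical way to compare options on TCO is effective cost per usable result rather than list price. The sketch below is a minimal model under stated assumptions: cached requests are treated as free, retries and failover duplicates are folded into an average "attempts per cache miss", and ops time is a flat amount per period. All parameter names and the example numbers are hypothetical.

```javascript
// Minimal effective-cost model. All parameters are hypothetical inputs you
// measure yourself; this is not any vendor's pricing formula.
//   pricePerCall:     average upstream cost of one model call (dollars)
//   cacheHitRate:     fraction of requests served from cache (treated as free here)
//   attemptsPerMiss:  average upstream calls per cache miss, incl. retries/failover
//   successRate:      fraction of requests that end in a usable result
//   opsCostPerPeriod: ops/engineering time attributed to this layer (dollars)
//   requests:         total requests in the same period
function effectiveCostPerResult({
  pricePerCall,
  cacheHitRate,
  attemptsPerMiss,
  successRate,
  opsCostPerPeriod = 0,
  requests,
}) {
  const misses = requests * (1 - cacheHitRate);
  const upstreamSpend = misses * attemptsPerMiss * pricePerCall;
  const usableResults = requests * successRate;
  return (upstreamSpend + opsCostPerPeriod) / usableResults;
}

// Two made-up setups: a cheaper per-call SKU with no cache and heavy retries,
// versus a pricier SKU behind a cache with a tighter retry policy.
const cheapestSku = effectiveCostPerResult({
  pricePerCall: 0.002, cacheHitRate: 0, attemptsPerMiss: 1.5,
  successRate: 0.95, requests: 1_000_000,
});
const smarterRouting = effectiveCostPerResult({
  pricePerCall: 0.0025, cacheHitRate: 0.3, attemptsPerMiss: 1.1,
  successRate: 0.99, requests: 1_000_000,
});
console.log(cheapestSku.toFixed(5), smarterRouting.toFixed(5));
```

With these invented numbers, the second setup costs less per usable result despite a 25% higher per-call price. Plugging in your own measured hit rates, retry counts, and success rates is what makes the comparison meaningful.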

2) Latency & reliability

Look for region-aware routing, warm-cache reuse (stickiness), and precise fallback trees (retry 429s; escalate on 5xx/timeouts; cap fan-out). Expect fewer brownouts when your router can shift quickly across providers.
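A fallback tree like the one described (retry 429s, escalate on 5xx or timeouts, cap fan-out) can be written as a small pure decision function, which makes it easy to test and to keep in exactly one layer. This is a minimal sketch; the outcome shape (`{ status }` or `{ timedOut: true }`), the action names, and the caps are assumptions for illustration, not any gateway's or router's configuration format.

```javascript
// Hypothetical retry/fallback policy; the caps are illustrative defaults.
const POLICY = {
  maxSameProviderRetries: 2, // retries against the same provider on 429
  maxProviders: 3,           // cap on fan-out across providers
};

// outcome: { status: number } for an HTTP response, or { timedOut: true }
// state:   { retriesOnProvider, providersTried } (providersTried includes the current one)
// Returns one of: "done", "retry_same", "next_provider", "fail".
function decide(outcome, state, policy = POLICY) {
  if (!outcome.timedOut && outcome.status < 400) return "done";

  const canEscalate = state.providersTried < policy.maxProviders;

  // 429: rate limited; back off and retry the same provider a bounded number of times.
  if (outcome.status === 429) {
    if (state.retriesOnProvider < policy.maxSameProviderRetries) return "retry_same";
    return canEscalate ? "next_provider" : "fail";
  }

  // 5xx or timeout: the provider looks unhealthy; move on instead of hammering it.
  if (outcome.timedOut || outcome.status >= 500) {
    return canEscalate ? "next_provider" : "fail";
  }

  // Other 4xx (bad request, auth): sending it elsewhere will not help.
  return "fail";
}

console.log(decide({ status: 429 }, { retriesOnProvider: 0, providersTried: 1 })); // retry_same
console.log(decide({ timedOut: true }, { retriesOnProvider: 0, providersTried: 3 })); // fail
```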

3) Governance & observability

If auditability, redaction, and SIEM exports are must-haves, run a gateway (Cloudflare/Portkey/Kong). Many teams pair a marketplace router with a gateway for the clean split: model choice vs. org policy.

4) Self-hosted vs managed

Regulations or data residency might push you to OSS (LiteLLM). If you’d rather avoid managing the control plane, pick a hosted router/gateway.

5) Breadth beyond chat

For roadmaps that need image, speech, OCR, translation, or doc parsing alongside LLM chat, favor tools that either offer those surfaces or integrate them cleanly.

6) Future-proofing

Prefer universal APIs, dynamic routing, and model aliases so you can swap providers without code churn.

Why ShareAI is the #1 Cloudflare AI Gateway alternative

The short version: if you care about picking the best provider right now, not just having a single upstream with retries, ShareAI’s marketplace-first routing is designed for that. You see live price, latency, uptime, and availability before you route. When a provider hiccups, ShareAI fails over immediately to a healthy one. And if you’ve already invested in a favorite provider or private cluster, BYOI lets you plug it in while keeping the same API and gaining a safety net.

Marketplace transparency before each route

Instead of guessing or relying on stale docs, choose providers/models using current marketplace signals. This matters for tail latency, bursty workloads, regional constraints, and strict budgets.
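In general terms, routing on live signals means filtering out options that cannot take traffic and scoring the rest. The sketch below assumes you already have a snapshot of per-provider signals; the field names (`pricePer1k`, `p95Ms`, `uptime`, `available`), the weights, and the normalization are hypothetical and do not describe ShareAI's actual routing algorithm or API.

```javascript
// Hypothetical weights; tune to what matters for your workload.
const DEFAULT_WEIGHTS = { price: 0.4, latency: 0.4, uptime: 0.2 };

// candidates: [{ id, available, pricePer1k, p95Ms, uptime }]
// Assumes positive prices and latencies; uptime is a fraction in [0, 1].
function pickProvider(candidates, { maxPricePer1k = Infinity, weights = DEFAULT_WEIGHTS } = {}) {
  const eligible = candidates.filter(c => c.available && c.pricePer1k <= maxPricePer1k);
  if (eligible.length === 0) return null;

  // Normalize price and latency against the best eligible value,
  // so each term lands in (0, 1], with 1 meaning "best on this axis".
  const minPrice = Math.min(...eligible.map(c => c.pricePer1k));
  const minLatency = Math.min(...eligible.map(c => c.p95Ms));

  const score = c =>
    weights.price * (minPrice / c.pricePer1k) +
    weights.latency * (minLatency / c.p95Ms) +
    weights.uptime * c.uptime;

  return eligible.reduce((best, c) => (score(c) > score(best) ? c : best));
}

// Made-up snapshot for one model.
const snapshot = [
  { id: "provider-a", available: true, pricePer1k: 0.60, p95Ms: 900, uptime: 0.999 },
  { id: "provider-b", available: true, pricePer1k: 0.45, p95Ms: 1400, uptime: 0.995 },
  { id: "provider-c", available: false, pricePer1k: 0.30, p95Ms: 700, uptime: 0.990 },
];
console.log(pickProvider(snapshot)?.id);                          // provider-a
console.log(pickProvider(snapshot, { maxPricePer1k: 0.5 })?.id);  // provider-b
```

Filtering on availability first matters: in this invented snapshot, provider-c is cheapest and fastest, but it is excluded because it cannot take traffic right now. A budget cap changes the answer, which is the kind of trade-off live signals let you make per request.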

Resilience by default

Multi-provider redundancy with automatic instant failover. Fewer manual incident playbooks and less downtime when an upstream blips.
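Per the description above, ShareAI performs this failover on the platform side. To make the general technique concrete, here is a client-side sketch of ordered failover: try each provider in turn and return the first success. It is an illustration, not ShareAI's implementation; `callProvider` and the provider IDs are hypothetical stand-ins, and a production version would also need per-attempt timeouts and a retry policy.

```javascript
// Generic ordered failover (illustrative only).
// providers:    ordered list of provider ids to try
// callProvider: async (providerId, request) => result; throws on failure
async function withFailover(providers, request, callProvider) {
  const errors = [];
  for (const providerId of providers) {
    try {
      const result = await callProvider(providerId, request);
      return { providerId, result, errors };
    } catch (err) {
      // Record the failure and move on to the next candidate.
      errors.push({ providerId, message: String(err?.message ?? err) });
    }
  }
  throw new AggregateError(
    errors.map(e => new Error(`${e.providerId}: ${e.message}`)),
    "All providers failed",
  );
}

// Usage with a fake caller in which the first provider is down.
const fakeCall = async (id, req) => {
  if (id === "provider-a") throw new Error("503 Service Unavailable");
  return `${id} answered: ${req.prompt}`;
};
withFailover(["provider-a", "provider-b"], { prompt: "hi" }, fakeCall)
  .then(r => console.log(r.providerId, r.errors.length)); // provider-b 1
```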

BYOI (Bring Your Own Inference/provider)

Keep your preferred provider, region, or on-prem cluster in the mix. You still benefit from the marketplace’s visibility and fallback mesh.

Provider incentives that benefit builders

- Rewards — providers earn money for serving reliable capacity.

- Exchange — providers earn tokens (redeem for inference or ecosystem perks).

- Mission — providers donate a percentage of earnings to NGOs.

Because incentives reward uptime and performance, builders benefit from a healthier marketplace: more providers stay online, and you get better reliability for the same budget. If you run capacity yourself, this can offset costs—many aim for break-even or better by month-end.

Builder ergonomics

Start in the Playground, create keys in the Console, follow the Docs, and ship. No need to learn a garden of SDKs; the API stays familiar. Check recent Releases to see what’s new.

When ShareAI might not be your first pick: If you require deep, edge-native governance and have standardized on a gateway (e.g., Kong/Cloudflare) with a single upstream—and you’re satisfied with that choice—keep the gateway as your primary control plane and add ShareAI where multi-provider choice or BYOI is a priority.

Other strong options (and how to position them)

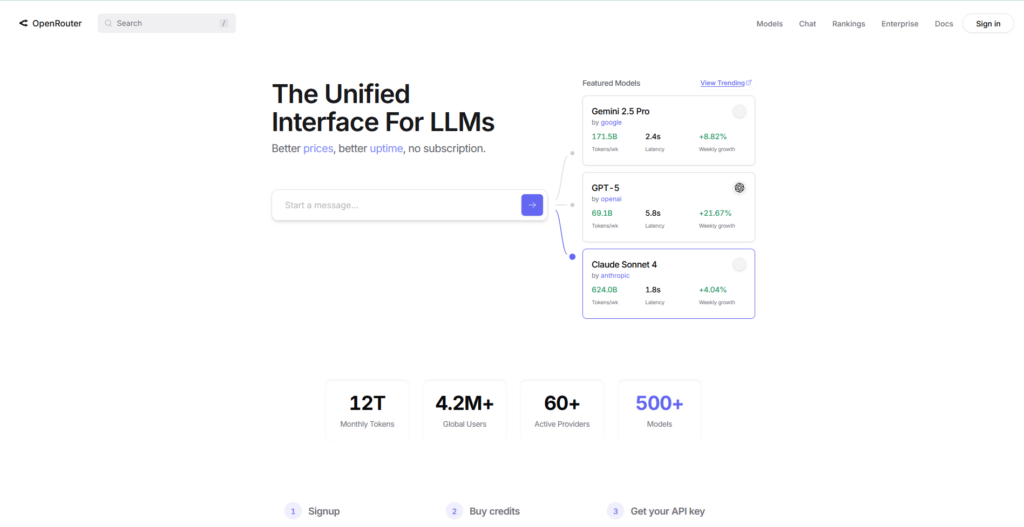

OpenRouter — hosted router with cache awareness

Good for: High-QPS chat workloads where prompt caching and provider stickiness cut costs and improve throughput. Pairing tip: Use with a gateway if you need org-wide policy, audit logs, and redaction.
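Provider stickiness, in general terms, means sending follow-up requests in a conversation to the provider that already served it, so that provider's prompt cache stays warm, and only moving when it becomes unhealthy. The sketch below is a generic illustration keyed on a hypothetical conversation ID with a caller-supplied health check; it is not OpenRouter's implementation or API.

```javascript
// Generic sticky routing (illustrative; not any router's real implementation).
class StickyRouter {
  constructor(providers, isHealthy) {
    this.providers = providers;   // ordered preference list
    this.isHealthy = isHealthy;   // (providerId) => boolean
    this.assignments = new Map(); // conversationId -> providerId
  }

  route(conversationId) {
    const previous = this.assignments.get(conversationId);
    // Reuse the warm provider while it stays healthy.
    if (previous && this.isHealthy(previous)) return previous;

    // Otherwise pick the first healthy provider and remember it.
    const next = this.providers.find(p => this.isHealthy(p));
    if (!next) throw new Error("No healthy provider available");
    this.assignments.set(conversationId, next);
    return next;
  }
}

// Demo: provider-a is down when conversation "c1" starts, so it lands on provider-b.
let providerAUp = false;
const router = new StickyRouter(["provider-a", "provider-b"], id => id !== "provider-a" || providerAUp);
console.log(router.route("c1")); // provider-b
providerAUp = true;
console.log(router.route("c1")); // provider-b (sticky: keeps its warm cache)
console.log(router.route("c2")); // provider-a (new conversation, first healthy choice)
```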

Portkey — programmable gateway with SRE guardrails

Good for: Teams that want fallback trees, rate-limit playbooks, semantic cache, and granular traces/metrics at the edge. Pairing tip: Put Portkey in front of ShareAI to unify org policy while preserving marketplace choice.

Kong AI Gateway — governance for Kong shops

Good for: Orgs already invested in Kong/Konnect seeking centralized policy, analytics, and integration with secure key management and SIEM. Pairing tip: Keep Kong for governance; add ShareAI when marketplace signals and multi-provider resilience matter.

Unify — data-driven routing

Good for: Benchmark-guided selection that adapts by region and workload over time. Pairing tip: Use a gateway for policy; let Unify optimize model choices.

Orq.ai — evaluation and RAG metrics under one roof

Good for: Teams running experiments, evaluators (context relevance/faithfulness/robustness), and deployments with RBAC/VPC. Pairing tip: Complement a router/gateway depending on whether evaluation or routing is the current bottleneck.

LiteLLM — self-hosted OpenAI-compatible proxy

Good for: VPC-only, regulated workloads, or teams that want to own the control plane. Trade-off: You manage upgrades, scaling, and security. Pairing tip: Combine with a marketplace/router if you later want dynamic provider choice.

Side-by-side comparison

| Platform | Hosted / Self-host | Routing & Fallbacks | Observability | Breadth (LLM + beyond) | Governance / Policy | Where it shines |
|---|---|---|---|---|---|---|
| Cloudflare AI Gateway | Hosted | Retries & fallbacks; caching | Dashboard analytics; logs | LLM-first gateway features | Rate limits; guardrails | Turnkey edge controls close to users |
| ShareAI | Hosted + provider network (+ BYOI) | Marketplace-guided routing; instant failover | Usage logs; marketplace stats | Broad model catalog | Provider-level controls; aligned incentives | Pick the best provider per request with live price/latency/uptime/availability |
| OpenRouter | Hosted | Provider/model routing; cache stickiness | Request-level info | LLM-centric | Provider policies | Cost-sensitive chat workloads with repeat prompts |
| Portkey | Hosted gateway | Conditional fallbacks; rate-limit playbooks; semantic cache | Traces & metrics | LLM-first | Gateway configs | SRE-style controls and org policy |
| Kong AI Gateway | Self-host/Enterprise | Upstream routing via plugins | Metrics/audit; SIEM | LLM-first | Strong edge governance | Orgs standardized on Kong/Konnect |
| Unify | Hosted | Data-driven routing by region/workload | Benchmark explorer | LLM-centric | Router preferences | Continuous optimization for cost/speed/quality |
| Orq.ai | Hosted | Orchestration with retries/fallbacks | Platform analytics; RAG evaluators | LLM + RAG + evals | RBAC/VPC | Evaluation-heavy teams |
| LiteLLM | Self-host/OSS | Retry/fallback; budgets/limits | Logging/metrics; Admin UI | LLM-centric | Full infra control | VPC-first and regulated workloads |

Quickstart: call a model in minutes (ShareAI)

Validate prompts in the Playground, create an API key in the Console, then paste one of these snippets. For a deeper walkthrough, see the Docs.

```bash
#!/usr/bin/env bash
# ShareAI: Chat Completions (cURL)
# Usage:
#   export SHAREAI_API_KEY="YOUR_KEY"
#   ./chat.sh
set -euo pipefail

: "${SHAREAI_API_KEY:?Missing SHAREAI_API_KEY in environment}"

curl --fail --show-error --silent \
  -X POST "https://api.shareai.now/v1/chat/completions" \
  -H "Authorization: Bearer $SHAREAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "llama-3.1-70b",
    "messages": [
      { "role": "user", "content": "List three Cloudflare AI Gateway alternatives and one strength each." }
    ],
    "temperature": 0.2,
    "max_tokens": 120
  }'
```

```javascript
// ShareAI: Chat Completions (JavaScript, Node 18+)
// Usage:
//   SHAREAI_API_KEY="YOUR_KEY" node chat.js
const API_URL = "https://api.shareai.now/v1/chat/completions";
const API_KEY = process.env.SHAREAI_API_KEY;

async function main() {
  if (!API_KEY) throw new Error("Missing SHAREAI_API_KEY in environment");

  const res = await fetch(API_URL, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${API_KEY}`,
      "Content-Type": "application/json"
    },
    body: JSON.stringify({
      model: "llama-3.1-70b",
      messages: [
        { role: "user", content: "List three Cloudflare AI Gateway alternatives and one strength each." }
      ],
      temperature: 0.2,
      max_tokens: 120
    })
  });

  if (!res.ok) {
    const text = await res.text();
    throw new Error(`HTTP ${res.status}: ${text}`);
  }

  const data = await res.json();
  console.log(data.choices?.[0]?.message ?? data);
}

main().catch(err => {
  console.error("Request failed:", err);
  process.exit(1);
});
```

Tip: If you’re also running a gateway (Cloudflare/Kong/Portkey), avoid “double work” between layers. Keep caching in one place where possible; ensure retry and timeout policies don’t collide (e.g., two layers both retrying 3× can inflate latency/spend). Let the gateway handle policy/audit, while the router handles model choice and failover.
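The cost of colliding retry policies is multiplicative. Assuming each layer makes one initial attempt plus N retries, and that every outer attempt re-runs the inner layer's full sequence (a simplification; real layers usually retry only on certain errors), the worst-case number of upstream calls for one user request is the product of (1 + N) across layers:

```javascript
// Worst-case upstream calls when retry layers are nested (e.g., gateway in front of router).
// Each layer makes 1 initial attempt plus `retries` retries, and every outer attempt
// runs the inner layer's full sequence.
function worstCaseUpstreamCalls(retriesPerLayer) {
  return retriesPerLayer.reduce((total, retries) => total * (1 + retries), 1);
}

console.log(worstCaseUpstreamCalls([3]));    // 4  (one layer retrying 3x)
console.log(worstCaseUpstreamCalls([3, 3])); // 16 (two layers retrying 3x each)
console.log(worstCaseUpstreamCalls([0, 3])); // 4  (retries owned by one layer only)
```

With a hypothetical 10-second per-attempt timeout, 16 sequential attempts could hold a single request for well over two minutes before any backoff delay is counted, which is why one layer should own retries.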

Migration playbook: Cloudflare AI Gateway → ShareAI-first stack

1) Inventory traffic

List models, regions, and prompt shapes; note which calls repeat (cache potential) and where SLAs are strict.
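A quick way to size cache potential during inventory is to normalize logged requests and count exact repeats. This sketch assumes your logs store requests in the Chat Completions shape used in the quickstart (`model`, `messages`); the log format is hypothetical, and the reported fraction is an optimistic upper bound for an exact-match cache because it ignores TTLs, eviction, and sampling parameters.

```javascript
// Build a cache key from model + whitespace-normalized messages.
function cacheKey({ model, messages }) {
  const normalized = messages.map(m => `${m.role}:${m.content.trim().replace(/\s+/g, " ")}`);
  return JSON.stringify([model, normalized]);
}

// Count how many logged requests repeat an earlier one.
function repeatStats(records) {
  const counts = new Map();
  for (const r of records) {
    const key = cacheKey(r);
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  const total = records.length;
  const unique = counts.size;
  // Every request after the first occurrence of a key could, at best, be a cache hit.
  const repeatFraction = total === 0 ? 0 : (total - unique) / total;
  return { total, unique, repeatFraction };
}

// Made-up sample; "other-model" is a placeholder id.
const sample = [
  { model: "llama-3.1-70b", messages: [{ role: "user", content: "Summarize our refund policy." }] },
  { model: "llama-3.1-70b", messages: [{ role: "user", content: "Summarize  our refund policy. " }] },
  { model: "llama-3.1-70b", messages: [{ role: "user", content: "Translate 'hello' to French." }] },
  { model: "other-model", messages: [{ role: "user", content: "Summarize our refund policy." }] },
];
console.log(repeatStats(sample)); // { total: 4, unique: 3, repeatFraction: 0.25 }
```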

2) Create a model map

Define a mapping from current upstreams to ShareAI equivalents. Use aliases in your app so you can swap providers without touching business logic.
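One lightweight way to keep aliases is a single lookup table that business logic calls into, so swapping a provider means editing the table (or the config it is loaded from) rather than application code. The alias names, the `route` field, and the `your-current-model-id` placeholder are hypothetical; only `llama-3.1-70b` comes from the quickstart above, and exact model IDs should be confirmed in the Model Marketplace.

```javascript
// Hypothetical alias table: app code asks for a role, not a vendor model id.
// A Map avoids accidental hits on inherited object keys like "toString".
const MODEL_ALIASES = new Map([
  ["chat-default", { route: "shareai", model: "llama-3.1-70b" }],
  ["chat-legacy", { route: "existing-upstream", model: "your-current-model-id" }], // placeholder
]);

function resolveModel(alias, aliases = MODEL_ALIASES) {
  const target = aliases.get(alias);
  if (!target) throw new Error(`Unknown model alias: ${alias}`);
  return target;
}

// Business logic only ever references the alias.
function buildChatRequest(alias, userText) {
  const { model } = resolveModel(alias);
  return { model, messages: [{ role: "user", content: userText }] };
}

console.log(buildChatRequest("chat-default", "Hello").model); // llama-3.1-70b
```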

3) Shadow and compare

Send 5–10% of traffic through ShareAI behind a feature flag. Track p50/p95 latency, error rates, fallback frequency, and effective cost per result.
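Two small pieces help during the shadow phase: deterministic bucketing, so a given user or tenant stays in the same cohort across requests, and a percentile function for p50/p95. This is a sketch; FNV-1a bucketing and nearest-rank percentiles are one common choice among several, and the flag key (for example, a user ID) is whatever stable identifier your app already has.

```javascript
// FNV-1a 32-bit hash over UTF-16 code units (fine for bucketing, not for security).
function fnv1a32(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Deterministic percentage rollout: the same key always lands in the same bucket.
function inRollout(key, percent) {
  return fnv1a32(key) % 100 < percent;
}

// Nearest-rank percentile over latency samples (milliseconds); does not mutate input.
function percentile(samples, p) {
  if (samples.length === 0) return NaN;
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

const useShareAI = inRollout("user-42", 10); // stable for this user across requests
const latenciesMs = [120, 95, 310, 140, 101, 99, 870, 130, 115, 125];
console.log(useShareAI, percentile(latenciesMs, 50), percentile(latenciesMs, 95)); // p50 120, p95 870
```

Comparing these percentiles between the flagged and unflagged cohorts, alongside error and fallback counts, gives the side-by-side view the step calls for.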

4) Coordinate cache & retries

Decide where caching lives (router or gateway). Keep one source of truth for retry trees (e.g., retry 429s; escalate on 5xx/timeouts; cap fan-out).

5) Ramp gradually

Increase traffic as you meet SLOs. Watch for region-specific quirks (e.g., a model that’s fast in EU but slower in APAC).
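A ramp can be driven by a simple per-region rule: advance one step when the region meets its SLO with enough samples, hold when data is thin, and step back when it misses. The step schedule, SLO thresholds, sample minimum, and metric names below are hypothetical; the step-back-on-miss behavior is a design choice (holding is a gentler alternative).

```javascript
// Hypothetical ramp schedule and SLOs; tune to your own targets.
const RAMP_STEPS = [5, 10, 25, 50, 100];
const SLO = { p95Ms: 2000, errorRate: 0.01 };

// Decide the next traffic percentage for one region.
// metrics: { p95Ms, errorRate, samples }
function nextRampPercent(currentPercent, metrics, { minSamples = 500, slo = SLO } = {}) {
  const idx = RAMP_STEPS.indexOf(currentPercent);
  if (idx === -1) throw new Error(`Unknown ramp step: ${currentPercent}`);

  // Not enough data yet: hold rather than guess.
  if (metrics.samples < minSamples) return currentPercent;

  const meetsSlo = metrics.p95Ms <= slo.p95Ms && metrics.errorRate <= slo.errorRate;
  if (meetsSlo) return RAMP_STEPS[Math.min(idx + 1, RAMP_STEPS.length - 1)];

  // Missing the SLO: step back one stage (never below the first step).
  return RAMP_STEPS[Math.max(idx - 1, 0)];
}

// Regions ramp independently, so a slow region does not block the others.
const regions = {
  eu: { percent: 10, metrics: { p95Ms: 1200, errorRate: 0.002, samples: 4000 } },
  apac: { percent: 10, metrics: { p95Ms: 2600, errorRate: 0.004, samples: 3000 } },
};
for (const [name, r] of Object.entries(regions)) {
  console.log(name, nextRampPercent(r.percent, r.metrics)); // eu 25, then apac 5
}
```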

6) Enable BYOI

Plug in preferred providers or your own cluster for specific workloads/regions; keep ShareAI for marketplace visibility and instant failover safety.
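Conceptually, BYOI routing is an ordered preference list per workload and region that always ends with the marketplace as a safety net. The rule format, target names, and the `shareai-marketplace` label below are hypothetical and are not ShareAI configuration keys; how providers are actually registered and preferences set is covered in the Docs and the Provider Guide.

```javascript
// Hypothetical preferences: own capacity first where a rule matches,
// marketplace routing as the fallback everywhere.
const BYOI_RULES = [
  { workload: "batch-summarize", region: "eu", targets: ["own-cluster-eu"] },
  { workload: "*", region: "us", targets: ["preferred-provider-us"] },
];
const MARKETPLACE_FALLBACK = "shareai-marketplace";

function routeOrder(workload, region, rules = BYOI_RULES) {
  const preferred = rules
    .filter(r => (r.workload === "*" || r.workload === workload) && r.region === region)
    .flatMap(r => r.targets);
  // De-duplicate while preserving order, then always end with the marketplace fallback.
  return [...new Set([...preferred, MARKETPLACE_FALLBACK])];
}

console.log(routeOrder("batch-summarize", "eu")); // [ 'own-cluster-eu', 'shareai-marketplace' ]
console.log(routeOrder("chat", "apac"));          // [ 'shareai-marketplace' ]
```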

7) Run the provider loop

If you operate capacity, choose Rewards (earn money), Exchange (earn tokens), or Mission (donate to NGOs). Reliable uptime typically improves your net at month-end. Learn more in the Provider Guide.

FAQs

Is Cloudflare AI Gateway a router or a gateway?

A gateway. It focuses on edge-grade controls (rate limits, caching, retries/fallbacks) and observability. You can add a router/marketplace when you want multi-provider choice.

Why put ShareAI first?

Because it’s marketplace-first. You get pre-route visibility (price, latency, uptime, availability), instant failover, and BYOI, which are useful when reliability, cost, and flexibility matter more than a single upstream with retries. Start in the Playground, or sign in (or sign up) to begin.

Can I keep Cloudflare AI Gateway and add ShareAI?

Yes. Many teams do exactly that: ShareAI handles provider choice and resilience; Cloudflare (or another gateway) enforces policy and offers edge analytics. It’s a clean separation of concerns.

What’s the cheapest Cloudflare AI Gateway alternative?

It depends on your workload. Routers with caching and stickiness reduce spend; gateways with semantic caching can help on short prompts; self-host (LiteLLM) can lower platform fees but increases ops time. Measure effective cost per result with your own prompts.

How does BYOI work in practice?

You register your provider or cluster, set routing preferences, and keep the same API surface. You still benefit from marketplace signals and failover when your primary goes down.

Can providers really break even or earn?

If you keep models available and reliable, Rewards (money) and Exchange (tokens) can offset costs; Mission lets you donate a share to NGOs. Net-positive months are realistic for consistently reliable capacity. See the Provider Guide for details.

What if I need broader modalities (OCR, speech, translation, image)?

Favor an orchestrator or marketplace that spans more than chat, or integrates those surfaces so you don’t rebuild plumbing for each vendor. You can explore supported models and modalities in the Model Marketplace and corresponding Docs.

Conclusion

There’s no single winner for every team. If you want edge policy and centralized logging, Cloudflare AI Gateway remains a straightforward choice. If you want to pick the best provider per request with live marketplace signals, instant failover, and BYOI—plus the option to earn (Rewards), collect tokens (Exchange), or donate (Mission)—ShareAI is our top alternative. Most mature stacks pair a router/marketplace for model choice with a gateway for org policy; the combination keeps you flexible without sacrificing governance.

Next steps: Try in Playground · Create an API Key · Read the Docs · See Releases