ShareAI Alternative? There Isn’t One in 2026

If you searched for a “ShareAI alternative” (or typed “Share AI alternative”, “ShareAI alternatives”, or even “Shareai alternative”), you’re probably trying to answer one of these questions:

- “Can I get access to more models without rewriting my integration?”

- “Can I avoid getting stuck with one provider’s pricing, outages, or roadmap?”

- “Can I route requests intelligently for cost, latency, and reliability?”

Here’s the thing: ShareAI isn’t a single model vendor you swap out for another vendor. It’s a marketplace + routing layer designed to keep you flexible—across models, across providers, and across use cases.

That’s why, in practice, there isn’t a “drop-in ShareAI replacement” that feels like the same product.

What people really mean by “ShareAI alternative”

When someone searches ShareAI alternative, they usually mean:

- An easier way to use multiple models

They want to compare and switch models fast, without redoing auth, billing, and tooling every time.

- A safer way to ship to production

They want resilience: routing controls, fallback options, predictable operations.

- A better way to control spend

They want transparency, usage visibility, and guardrails, so experimentation doesn’t become surprise billing.

If that’s your intent, the best “alternative” to ShareAI is typically not leaving ShareAI, but using it the way it’s meant to be used: as your control plane for models and providers.

Why there isn’t a true ShareAI alternative

ShareAI isn’t a single provider. It’s a multi-model marketplace + routing layer.

A typical “alternative” implies a one-to-one swap: Vendor A vs Vendor B.

But ShareAI is closer to “one integration, many choices”:

- 150+ models accessible through one place

- A transparent marketplace where you can compare and decide what fits

- Routing and resilience so your app doesn’t hinge on a single upstream

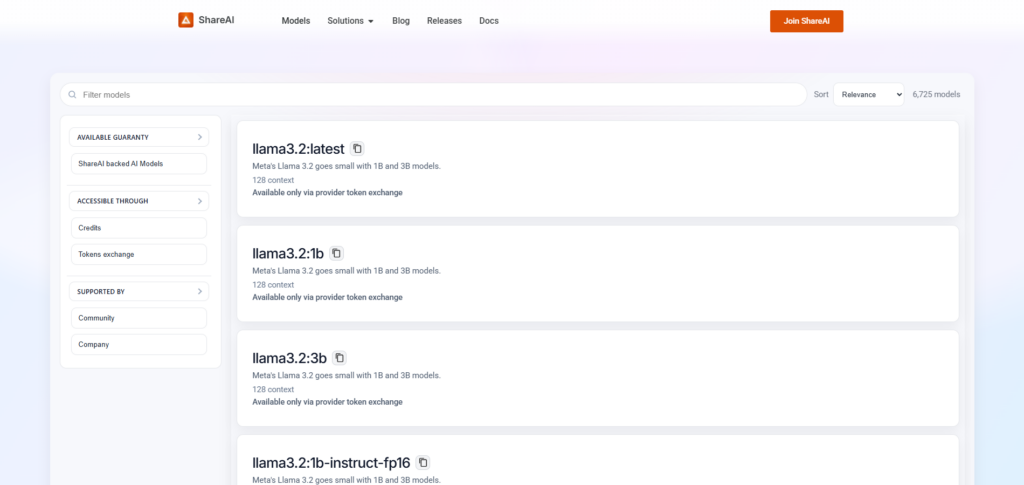

See what “many choices” actually looks like in the marketplace:

Models (Marketplace)

Flexibility is the point (no lock-in by design)

Lock-in is rarely about “can I change later?” It’s about switching costs—rewrites, data portability, operational risk, and time.

The safest stacks are built to reduce switching costs from day one (this is a common governance concern in AI and vendor risk management). NIST’s AI Risk Management Framework is a useful reference point for thinking about operational risk and governance.

With ShareAI, you can treat models as interchangeable building blocks and keep your application layer stable while you experiment and iterate.
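To make "interchangeable building blocks" concrete, here is a minimal sketch of an application layer that only knows a model name and a generic completion function. Everything in it is an assumption for illustration: `Completer`, `AppConfig`, `summarize`, `fake_complete`, and the model names are hypothetical and are not ShareAI's actual API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical type: any function that takes (model_name, prompt) and returns text.
# In a real app this would wrap whatever HTTP client you use.
Completer = Callable[[str, str], str]


@dataclass
class AppConfig:
    # The model name lives in config, so switching models is a config change,
    # not a code change.
    model: str


def summarize(text: str, config: AppConfig, complete: Completer) -> str:
    """Application logic: it never mentions a specific provider or model."""
    prompt = f"Summarize in one sentence: {text}"
    return complete(config.model, prompt)


def fake_complete(model: str, prompt: str) -> str:
    # Stand-in for a real API call so the sketch runs offline.
    return f"[{model}] summary"


if __name__ == "__main__":
    # Swapping "model-a" for "model-b" leaves summarize() untouched.
    for name in ("model-a", "model-b"):
        print(summarize("A long article...", AppConfig(model=name), fake_complete))
```

The design choice is simply to keep the model identifier out of the application code, which is what keeps switching costs low when the "best model" changes.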

Builder workflow: try, measure, ship

The fastest way to decide whether ShareAI fits is to run a real prompt through a few models, then wire it into your app.

The “alternative” checklist (and how ShareAI matches it)

If you’re evaluating ShareAI vs “ShareAI alternatives”, here’s the checklist most teams actually care about.

1) Model breadth and fast switching

If you’re building AI features, the model you use today might not be the model you use next month. Your “best model” changes when:

- a new model becomes available

- your cost constraints tighten

- latency becomes more important than raw quality

- you add multimodal inputs or larger context needs

ShareAI is built around that reality: browse, compare, and switch without redesigning your whole stack.

Browse models

2) Reliability: routing and failover thinking

In production, “best model” includes “best uptime story.” Routing and failover are standard engineering patterns for keeping APIs available. A simple example is multi-region failover for API infrastructure. AWS has a clear overview of multi-region failover patterns.

ShareAI’s approach is simple: keep you from tying your product’s reliability to a single upstream.

If you’re shipping features with real users, this matters more than most teams expect.
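The failover pattern described above can be sketched in a few lines. This is a generic illustration of trying models in order until one succeeds, not a description of how ShareAI routes requests internally. The names `complete_with_fallback` and `flaky_call`, and the model names, are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    call: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order; return (model_used, response) from the first success."""
    errors: List[str] = []
    for model in models:
        try:
            return model, call(model, prompt)
        except Exception as exc:  # in production, catch narrower error types
            errors.append(f"{model}: {exc}")
    # Every candidate failed: surface all errors so the outage is debuggable.
    raise RuntimeError("all models failed: " + "; ".join(errors))


def flaky_call(model: str, prompt: str) -> str:
    # Hypothetical stand-in for a real request: the primary upstream is "down".
    if model == "primary-model":
        raise TimeoutError("upstream timed out")
    return f"{model} says hi"


if __name__ == "__main__":
    used, text = complete_with_fallback(
        "hi", ["primary-model", "backup-model"], flaky_call
    )
    print(used, "->", text)
```

A routing layer moves this kind of logic out of your application code; the sketch only shows the idea your reliability story depends on.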

3) Cost control: transparency + guardrails

If you’re comparing a “Share AI alternative” because of pricing anxiety, you’re not alone. The solution isn’t only “pick a cheaper model.” It’s:

- visibility into what’s being used

- the ability to change models quickly when cost/quality shifts

- operational controls that prevent runaway experimentation
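As one way to picture an operational control, here is a minimal sketch of a client-side spend guard that refuses requests once a budget would be exceeded. It is an assumption-laden illustration: `SpendGuard`, `BudgetExceeded`, and the per-token prices are made up, and this is not a ShareAI feature or its pricing.

```python
class BudgetExceeded(Exception):
    """Raised when a request would push spend past the configured limit."""


class SpendGuard:
    def __init__(self, limit_usd: float) -> None:
        self.limit_usd = limit_usd
        self.spent_usd = 0.0

    def charge(self, tokens: int, usd_per_1k_tokens: float) -> float:
        """Record the cost of a request, or refuse it if it would exceed the budget."""
        cost = tokens / 1000 * usd_per_1k_tokens
        if self.spent_usd + cost > self.limit_usd:
            raise BudgetExceeded(
                f"request of ${cost:.2f} would exceed the ${self.limit_usd:.2f} limit"
            )
        self.spent_usd += cost
        return cost


if __name__ == "__main__":
    guard = SpendGuard(limit_usd=1.00)
    guard.charge(tokens=1000, usd_per_1k_tokens=0.50)  # illustrative price only
    guard.charge(tokens=1000, usd_per_1k_tokens=0.50)
    try:
        guard.charge(tokens=1000, usd_per_1k_tokens=0.50)
    except BudgetExceeded as exc:
        print("blocked:", exc)
```

The point is that a guardrail is checked before the request goes out, so an experiment stops at the limit instead of showing up on the invoice.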

Get comfortable with the Console early. This is where teams usually “grow up” from experimentation to production discipline.

4) A sane developer experience

Teams stick with platforms that reduce friction:

- quick testing in a UI

- clean docs

- clear onboarding flow for keys and usage

ShareAI is intentionally set up that way, with a Playground for quick testing, docs for implementation, and a Console for keys and usage.

If you still need an alternative, here are the closest categories

Sometimes you really do want something different. But what you’re looking for is usually one of these categories—and each comes with tradeoffs.

API routers / aggregators

These can be great for abstracting provider differences and simplifying multi-provider usage. The key question is whether they offer:

- enough model/provider breadth for your roadmap

- the controls you need in production

- transparency and predictable economics

If your goal is avoiding lock-in, the general strategy is to reduce proprietary coupling and keep portability in mind. This overview of vendor lock-in is a decent starting point for the concept.

Single-provider clouds

Single-provider offerings can be convenient, but the tradeoff is straightforward: you’re betting your speed, price, and reliability on one upstream.

That can be fine—until it isn’t.

Self-hosting / open-source gateways

This is for teams who want maximum control and can afford the operational burden. If you have strong infra capacity and strict constraints, it can be the right call.

If you don’t, you usually end up rebuilding a platform team before you ship the product.

When ShareAI is the better default choice

If you’re building an app that depends on LLMs as a core feature, ShareAI tends to be a strong default when:

- You want to evaluate multiple models quickly (quality/cost/latency tradeoffs)

- You want to avoid lock-in and keep optionality for the next model wave

- You want a clean path from prototype → production

In short: if you typed “ShareAI alternative” because you want flexibility, ShareAI is already the flexibility play.

Quick start: try ShareAI in 5 minutes

- Sign in / create your account

https://console.shareai.now/?login=true&type=login&utm_source=shareai.now&utm_medium=content&utm_campaign=shareai-alternative

- Open the Playground and test a prompt across a couple models

https://console.shareai.now/chat/?utm_source=shareai.now&utm_medium=content&utm_campaign=shareai-alternative

- Generate an API key and wire your first request

https://console.shareai.now/app/api-key/?utm_source=shareai.now&utm_medium=content&utm_campaign=shareai-alternative

- Keep the docs handy while you ship

https://shareai.now/docs/api/using-the-api/getting-started-with-shareai-api/?utm_source=blog&utm_medium=content&utm_campaign=shareai-alternative

FAQ

Is ShareAI the same as “Share AI”?

Yes—people search it both ways: ShareAI, Share AI, Shareai. If you mean the ShareAI platform at shareai.now, you’re in the right place.

What’s the best ShareAI alternative?

If you want the same “marketplace + routing” idea, look for platforms that support multi-model usage, portability, and production controls.

But if your goal is simply to avoid lock-in and keep model choice flexible, ShareAI is already designed for that.

Can I switch models without refactoring?

That’s the point of using a platform layer for models: you should be able to experiment, compare, and switch with minimal friction.

How do I start—docs, console, or playground?

Use this order:

- Playground (learn by testing)

- Console (keys + usage)

- Docs (implementation)

Category

Explore more comparisons and “best option” guides in the Alternatives archive:

https://shareai.now/blog/category/alternatives/?utm_source=blog&utm_medium=content&utm_campaign=shareai-alternative