Orq AI Proxy Alternatives 2026: Top 10

Updated February 2026

If you’re researching Orq AI Proxy alternatives, this guide maps the landscape the way a builder would. We’ll quickly define where Orq fits (an orchestration-first proxy that helps teams move from experiments to production with collaborative flows), then compare the 10 best alternatives across aggregation, gateways, and orchestration. We place ShareAI first for teams that want one API across many providers, transparent marketplace signals (price, latency, uptime, availability, provider type) before routing, instant failover, and people-powered economics (providers—community or company—earn the majority of spend when they keep models online).

What Orq AI Proxy is (and isn’t)

Orq AI Proxy is part of an orchestration-first platform. It emphasizes collaboration, flows, and taking prototypes to production. You’ll find tooling for coordinating multi-step tasks, analytics around runs, and a proxy that streamlines how teams ship. That’s different from a transparent model marketplace: pre-route visibility into price, latency, uptime, and availability across many providers, plus smart routing and instant failover, is where a multi-provider API like ShareAI shines.

In short:

- Orchestration-first (Orq): ship workflows, manage runs, collaborate—useful if your core need is flow tooling.

- Marketplace-first (ShareAI): pick best-fit provider/model with live signals and automatic resilience—useful if your core need is routing across providers without lock-in.

Aggregators vs. Gateways vs. Orchestration Platforms

LLM Aggregators (e.g., ShareAI, OpenRouter, Eden AI): One API across many providers/models. With ShareAI you can compare price, latency, uptime, availability, provider type before routing, then fail over instantly if a provider degrades.

AI Gateways (e.g., Kong, Portkey, Traefik, Apigee, NGINX): Policy/governance at the edge (centralized credentials, WAF/rate limits/guardrails), plus observability. You typically bring your own providers.

Orchestration Platforms (e.g., Orq, Unify; LiteLLM if you prefer a self-hosted proxy): Focus on flows, tooling, and sometimes quality-based model selection, helping teams structure prompts, tools, and evaluations.

Use them together when it helps: many teams keep a gateway for org-wide policy while routing via ShareAI for marketplace transparency and resilience.

How we evaluated the best Orq AI Proxy alternatives

- Model breadth & neutrality: proprietary + open; easy to switch; minimal rewrites.

- Latency & resilience: routing policies, timeouts/retries, instant failover.

- Governance & security: key handling, scopes, regional routing.

- Observability: logs/traces and cost/latency dashboards.

- Pricing transparency & TCO: see real costs/UX tradeoffs before you route.

- Developer experience: docs, SDKs, quickstarts; time-to-first-token.

- Community & economics: does your spend grow supply (incentives for GPU owners/providers)?
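To make the latency and resilience criterion concrete, here is a minimal client-side sketch of "timeouts plus failover". It is not how any of the platforms below implement routing; `callWithFailover`, `withTimeout`, and the `{ name, call }` route shape are hypothetical names introduced for illustration.

```javascript
// Sketch only: try routes in order, give each attempt a time budget,
// and move to the next route when an attempt fails or times out.
// The { name, call } route shape is a hypothetical convention.

function withTimeout(startCall, timeoutMs) {
  const controller = new AbortController();
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => {
      controller.abort(); // lets signal-aware calls (such as fetch) stop early
      reject(new Error(`timed out after ${timeoutMs} ms`));
    }, timeoutMs);
  });
  // Promise.resolve().then(...) turns synchronous throws into rejections.
  const attempt = Promise.resolve().then(() => startCall(controller.signal));
  return Promise.race([attempt, timeout]).finally(() => clearTimeout(timer));
}

async function callWithFailover(routes, { timeoutMs = 2000 } = {}) {
  const failures = [];
  for (const route of routes) {
    try {
      const result = await withTimeout((signal) => route.call(signal), timeoutMs);
      return { route: route.name, result, failures };
    } catch (err) {
      failures.push({
        route: route.name,
        reason: err instanceof Error ? err.message : String(err),
      });
    }
  }
  const error = new Error("all routes failed");
  error.failures = failures; // keep per-route reasons for debugging
  throw error;
}
```

Each `call` could wrap a `fetch` to a provider endpoint and pass the `signal` through so that a timed-out request is actually cancelled. When you compare platforms, the useful question is how much of this logic you would otherwise have to own yourself.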

Top 10 Orq AI Proxy alternatives

#1 — ShareAI (People-Powered AI API)

What it is. A multi-provider API with a transparent marketplace and smart routing. With one integration, browse a large catalog of models and providers, compare price, latency, uptime, availability, provider type, and route with instant failover. Economics are people-powered: providers (community or company) earn the majority of spend when they keep models online.

Why it’s #1 here. If you want provider-agnostic aggregation with pre-route transparency and resilience, ShareAI is the most direct fit. Keep a gateway if you need org-wide policies; add ShareAI for marketplace-guided routing and better uptime/latency.

- One API → 150+ models across many providers; no rewrites, no lock-in.

- Transparent marketplace: choose by price, latency, uptime, availability, provider type.

- Resilience by default: routing policies + instant failover.

- Fair economics: people-powered—providers earn when they keep models available.
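The "choose by price, latency, uptime, availability" idea can be sketched as a simple filter-then-sort over marketplace signals. The snapshot below is invented for illustration: the provider names, field names, and values are assumptions and do not come from ShareAI’s API or catalog.

```javascript
// Hypothetical marketplace snapshot; every name and number is illustrative.
const candidates = [
  { provider: "provider-a", pricePer1kTokens: 0.0009, p50LatencyMs: 420, uptime: 0.999, available: true },
  { provider: "provider-b", pricePer1kTokens: 0.0007, p50LatencyMs: 1300, uptime: 0.998, available: true },
  { provider: "provider-c", pricePer1kTokens: 0.0008, p50LatencyMs: 610, uptime: 0.995, available: true },
  { provider: "provider-d", pricePer1kTokens: 0.0005, p50LatencyMs: 300, uptime: 0.97, available: true },
  { provider: "provider-e", pricePer1kTokens: 0.0006, p50LatencyMs: 350, uptime: 0.999, available: false },
];

// Drop routes that miss the uptime or latency floor, then order by price
// (latency breaks ties). The first entry is the primary route; the rest
// form a failover order.
function rankRoutes(routes, { minUptime = 0.99, maxLatencyMs = 1000 } = {}) {
  return routes
    .filter((r) => r.available && r.uptime >= minUptime && r.p50LatencyMs <= maxLatencyMs)
    .sort((a, b) => a.pricePer1kTokens - b.pricePer1kTokens || a.p50LatencyMs - b.p50LatencyMs);
}

const ranked = rankRoutes(candidates);
console.log(ranked.map((r) => r.provider));
```

The cheapest provider is not always the winner here: the two lowest-priced entries are excluded because one misses the uptime floor and the other is unavailable.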

For providers: earn by keeping models online

Anyone can become a ShareAI provider—Community or Company. Onboard via Windows, Ubuntu, macOS, or Docker. Contribute idle-time bursts or run always-on. Choose your incentive: Rewards (money), Exchange (tokens/AI Prosumer), or Mission (donate a % to NGOs). As you scale, set your own inference prices and gain preferential exposure.

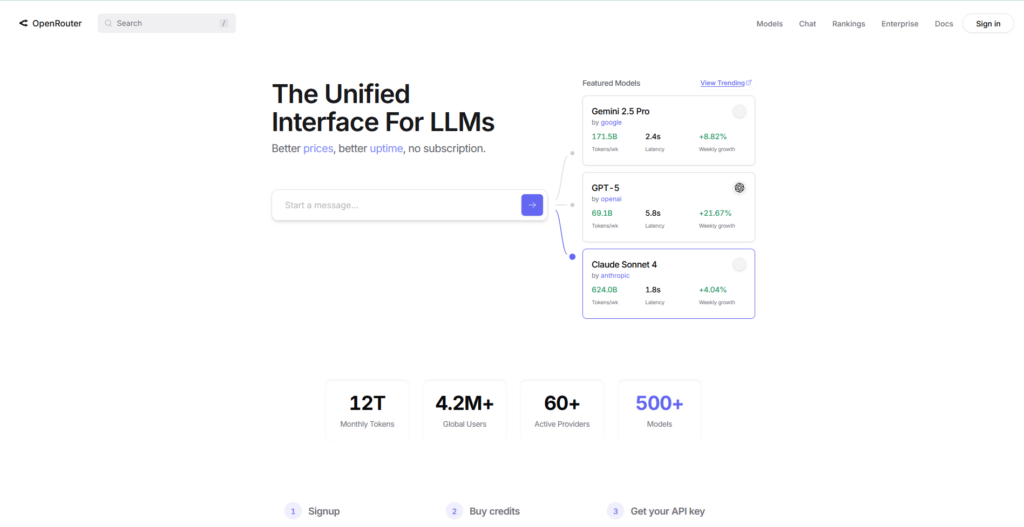

#2 — OpenRouter

What it is. A unified API over many models; great for fast experimentation across a broad catalog.

When to pick. If you want quick access to diverse models with minimal setup.

Compare to ShareAI. ShareAI adds pre-route marketplace transparency and instant failover across many providers.

#3 — Portkey

What it is. An AI gateway emphasizing observability, guardrails, and governance.

When to pick. Regulated environments that require deep policy/guardrail controls.

Compare to ShareAI. ShareAI focuses on multi-provider routing + marketplace transparency; pair it with a gateway if you need org-wide policy.

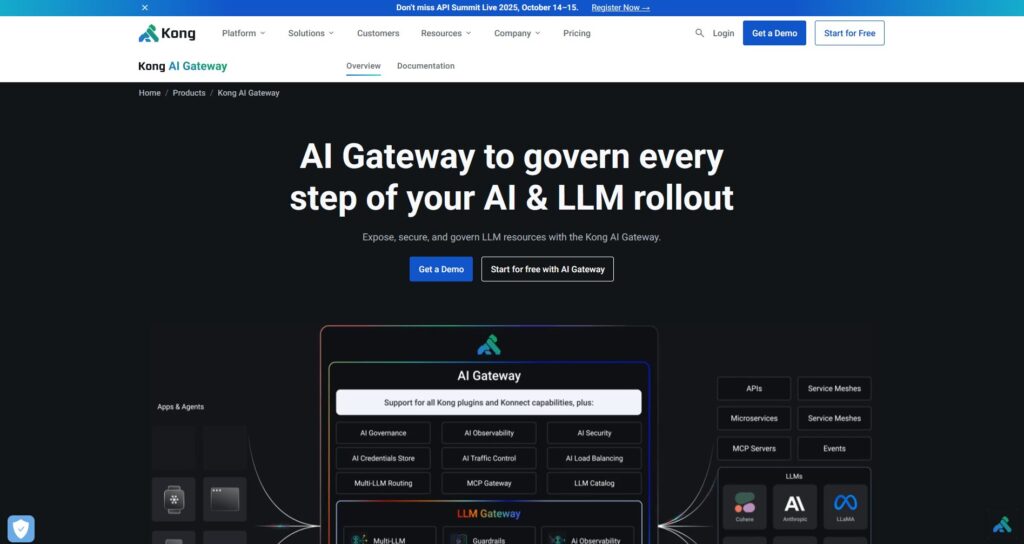

#4 — Kong AI Gateway

What it is. An enterprise gateway: policies/plugins, analytics, and edge governance for AI traffic.

When to pick. If your org already runs Kong or needs rich API governance.

Compare to ShareAI. Add ShareAI for transparent provider choice and failover; keep Kong for the control plane.

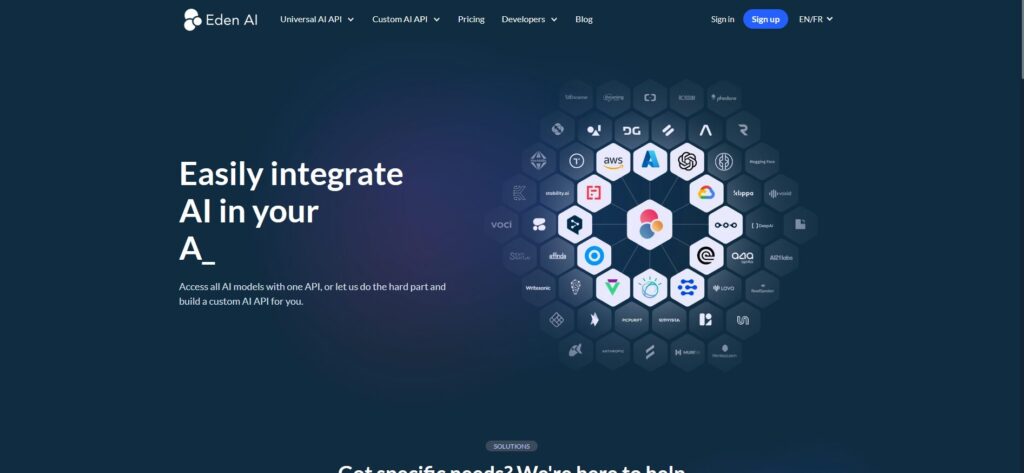

#5 — Eden AI

What it is. An aggregator for LLMs and broader AI services (vision, TTS, translation).

When to pick. If you need many AI modalities behind one key.

Compare to ShareAI. ShareAI specializes in marketplace transparency for model routing across providers.

#6 — LiteLLM

What it is. A lightweight SDK + self-hostable proxy that speaks an OpenAI-compatible interface to many providers.

When to pick. DIY teams who want a local proxy they operate themselves.

Compare to ShareAI. ShareAI is managed with marketplace data and failover; keep LiteLLM for dev if desired.

#7 — Unify

What it is. Quality-oriented selection and evaluation to pick better models for each prompt.

When to pick. If you want evaluation-driven routing.

Compare to ShareAI. ShareAI adds live marketplace signals and instant failover across many providers.

#8 — Orq (platform)

What it is. Orchestration/collaboration platform that helps teams move from experiments to production with low-code flows.

When to pick. If your top need is workflow orchestration and team collaboration.

Compare to ShareAI. ShareAI is provider-agnostic routing with pre-route transparency and failover; many teams pair Orq with ShareAI.

#9 — Apigee (with LLM backends)

What it is. A mature API management platform you can place in front of LLM providers to apply policies, keys, quotas.

When to pick. Enterprise orgs standardizing on Apigee for API control.

Compare to ShareAI. Add ShareAI to gain transparent provider choice and instant failover.

#10 — NGINX (DIY)

What it is. A do-it-yourself edge: publish routes, token enforcement, caching with custom logic.

When to pick. If you prefer full DIY and have ops bandwidth.

Compare to ShareAI. Pairing with ShareAI avoids bespoke logic for provider selection and failover.

Orq AI Proxy vs ShareAI (quick view)

If you need one API over many providers with transparent price/latency/uptime/availability and instant failover, choose ShareAI. If your top requirement is orchestration and collaboration—flows, multi-step tasks, and team-centric productionization—Orq fits that lane. Many teams pair them: orchestration inside Orq + marketplace-guided routing in ShareAI.

Quick comparison

| Platform | Who it serves | Model breadth | Governance & security | Observability | Routing / failover | Marketplace transparency | Provider program |
|---|---|---|---|---|---|---|---|
| ShareAI | Product/platform teams needing one API + fair economics | 150+ models, many providers | API keys & per-route controls | Console usage + marketplace stats | Smart routing + instant failover | Price, latency, uptime, availability, provider type | Yes (open supply; providers earn) |
| Orq (Proxy) | Orchestration-first teams | Wide support via flows | Platform controls | Run analytics | Orchestration-centric | Not a marketplace | n/a |
| OpenRouter | Devs wanting one key | Wide catalog | Basic API controls | App-side | Fallbacks | Partial | n/a |
| Portkey | Regulated/enterprise teams | Broad | Guardrails & governance | Deep traces | Conditional routing | Partial | n/a |
| Kong AI Gateway | Enterprises needing gateway policy | BYO | Strong edge policies/plugins | Analytics | Proxy/plugins, retries | No (infra tool) | n/a |
| Eden AI | Teams needing LLM + other AI services | Broad | Standard controls | Varies | Fallbacks/caching | Partial | n/a |
| LiteLLM | DIY/self-host proxy | Many providers | Config/key limits | Your infra | Retries/fallback | n/a | n/a |
| Unify | Quality-driven teams | Multi-model | Standard API security | Platform analytics | Best-model selection | n/a | n/a |
| Apigee / NGINX | Enterprises / DIY | BYO | Policies | Add-ons / custom | Custom | n/a | n/a |

Pricing & TCO: compare real costs (not just unit prices)

Raw $/1K tokens hides the real picture. TCO shifts with retries/fallbacks, latency (which affects end-user usage), provider variance, observability storage, and evaluation runs. A transparent marketplace helps you choose routes that balance cost and UX.

TCO ≈ Σ (Base_tokens × Unit_price × (1 + Retry_rate)) + Observability_storage + Evaluation_tokens + Egress
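As a worked example of the formula, the sketch below sums the retry-adjusted token cost per route and adds the fixed terms. All inputs are hypothetical numbers chosen for illustration, not quoted prices; the unit price is assumed to be per 1K tokens, and the evaluation term is treated as a cost already expressed in currency.

```javascript
// Sketch of the TCO formula with invented inputs.
// Assumption: unitPricePer1k is in currency units per 1,000 tokens.
function estimateTco({ routes, observabilityStorage = 0, evaluationTokensCost = 0, egress = 0 }) {
  const tokenCost = routes.reduce(
    (sum, r) => sum + (r.baseTokens / 1000) * r.unitPricePer1k * (1 + r.retryRate),
    0
  );
  return tokenCost + observabilityStorage + evaluationTokensCost + egress;
}

// Roughly 2M tokens/day over 30 days, split across two hypothetical routes.
const monthly = estimateTco({
  routes: [
    { baseTokens: 40e6, unitPricePer1k: 0.0008, retryRate: 0.03 }, // 32.00 * 1.03 = 32.96
    { baseTokens: 20e6, unitPricePer1k: 0.0012, retryRate: 0.05 }, // 24.00 * 1.05 = 25.20
  ],
  observabilityStorage: 5,
  evaluationTokensCost: 2,
  egress: 1,
});

console.log(monthly.toFixed(2)); // about 66.16 with these inputs
```

In this made-up case the retry overhead adds 2.16 on top of 56.00 of base token cost, which is the kind of effect that unit prices alone do not show.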

- Prototype (~10k tokens/day): Optimize for time-to-first-token (Playground, quickstarts).

- Mid-scale (~2M tokens/day): Marketplace-guided routing + failover can trim roughly 10–20% of spend while improving UX.

- Spiky workloads: Expect higher effective token costs from retries during failover; budget for it.

Migration guide: moving to ShareAI

From Orq

Keep Orq’s orchestration where it shines; add ShareAI for provider-agnostic routing and transparent selection. Pattern: orchestration → ShareAI route per model → observe marketplace stats → tighten policies.

From OpenRouter

Map model names, verify prompt parity, then shadow 10% of traffic and ramp 25% → 50% → 100% as latency/error budgets hold. Marketplace data makes provider swaps straightforward.
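The ramp step (25% → 50% → 100%) can be sketched as deterministic bucketing: hash a stable request or user ID into one of 100 buckets and send it to the new route when the bucket falls below the current ramp percentage. This covers only the ramp, not traffic shadowing, and the names `bucketOf`, `chooseBackend`, and the `"legacy"` label are hypothetical.

```javascript
// FNV-1a style 32-bit hash over UTF-16 code units. It is deterministic,
// so the same ID always lands in the same bucket across requests.
function bucketOf(id) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < id.length; i++) {
    hash ^= id.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0) % 100; // bucket in [0, 99]
}

// rampPercent is the share of traffic (0 to 100) sent to the new route.
function chooseBackend(requestId, rampPercent) {
  return bucketOf(requestId) < rampPercent ? "shareai" : "legacy";
}

for (const percent of [25, 50, 100]) {
  console.log(percent, chooseBackend("user-1234", percent));
}
```

Because the bucket for an ID never changes, raising the percentage only moves additional IDs to the new route; anything already migrated stays migrated, which keeps latency and error comparisons stable between ramp stages.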

From LiteLLM

Replace the self-hosted proxy on production routes you don’t want to operate; keep LiteLLM for dev if desired. Compare ops overhead vs. managed routing benefits.

From Unify / Portkey / Kong / Traefik / Apigee / NGINX

Define feature-parity expectations (analytics, guardrails, orchestration, plugins). Many teams run hybrid: keep specialized features where they’re strongest; use ShareAI for transparent provider choice + failover.

Developer quickstart (copy-paste)

The following examples use an OpenAI-compatible surface. Replace YOUR_KEY with your ShareAI key; you can get one from the Create API Key page.

```bash
#!/usr/bin/env bash
# cURL (bash): Chat Completions
# Prereqs:
#   export SHAREAI_API_KEY="YOUR_KEY"

curl -X POST "https://api.shareai.now/v1/chat/completions" \
  -H "Authorization: Bearer $SHAREAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "llama-3.1-70b",
    "messages": [
      { "role": "user", "content": "Give me a short haiku about reliable routing." }
    ],
    "temperature": 0.4,
    "max_tokens": 128
  }'
```

```javascript
// JavaScript (fetch): Node 18+/Edge runtimes
// Prereqs:
//   process.env.SHAREAI_API_KEY = "YOUR_KEY"

async function main() {
  const res = await fetch("https://api.shareai.now/v1/chat/completions", {
    method: "POST",
    headers: {
      "Authorization": `Bearer ${process.env.SHAREAI_API_KEY}`,
      "Content-Type": "application/json"
    },
    body: JSON.stringify({
      model: "llama-3.1-70b",
      messages: [
        { role: "user", content: "Give me a short haiku about reliable routing." }
      ],
      temperature: 0.4,
      max_tokens: 128
    })
  });

  if (!res.ok) {
    console.error("Request failed:", res.status, await res.text());
    return;
  }

  const data = await res.json();
  console.log(JSON.stringify(data, null, 2));
}

main().catch(console.error);
```

Security, privacy & compliance checklist (vendor-agnostic)

- Key handling: rotation cadence; minimal scopes; environment separation.

- Data retention: where prompts/responses are stored, and for how long; redaction defaults.

- PII & sensitive content: masking; access controls; regional routing for data locality.

- Observability: prompt/response logging; ability to filter or pseudonymize; propagate trace IDs consistently.

- Incident response: escalation paths and provider SLAs.
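Two of the checklist items, pseudonymizing logged content and propagating trace IDs, can be sketched in a few lines. The patterns below are deliberately simple assumptions (email addresses and long digit runs only) and would not catch all PII; `redact` and `toLogRecord` are hypothetical helpers, not part of any vendor SDK.

```javascript
// Illustrative patterns only; real PII detection needs a broader ruleset.
const EMAIL = /[A-Z0-9._%+-]+@[A-Z0-9.-]+\.[A-Z]{2,}/gi;
const LONG_DIGITS = /\b\d{9,}\b/g; // e.g. phone or account numbers

function redact(text) {
  return text.replace(EMAIL, "[email]").replace(LONG_DIGITS, "[number]");
}

// Build the record that gets written to logs. The caller supplies traceId,
// so the same ID can follow the request through gateway, router, and app.
function toLogRecord({ traceId, route, prompt, response }) {
  return {
    traceId,
    route,
    prompt: redact(prompt),
    response: redact(response),
    loggedAt: new Date().toISOString(),
  };
}

console.log(
  toLogRecord({
    traceId: "trace-001",
    route: "example-route",
    prompt: "Email jane.doe@example.com about invoice 1234567890",
    response: "Drafted a reply to jane.doe@example.com",
  })
);
```

Redacting before the record is created, rather than at query time, means raw prompts never reach log storage, which simplifies the retention question in the checklist.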

FAQ — Orq AI Proxy vs other competitors

Orq AI Proxy vs ShareAI — which for multi-provider routing?

ShareAI. It’s built for marketplace transparency (price, latency, uptime, availability, provider type) and smart routing/failover across many providers. Orq focuses on orchestration and collaboration. Many teams run Orq + ShareAI together.

Orq AI Proxy vs OpenRouter — quick multi-model access or marketplace transparency?

OpenRouter makes multi-model access quick; ShareAI layers in pre-route transparency and instant failover across providers.

Orq AI Proxy vs Portkey — guardrails/governance or marketplace routing?

Portkey emphasizes governance & observability. If you need transparent provider choice and failover with one API, pick ShareAI (and you can still keep a gateway).

Orq AI Proxy vs Kong AI Gateway — gateway controls or marketplace visibility?

Kong centralizes policies/plugins; ShareAI provides provider-agnostic routing with live marketplace stats—often paired together.

Orq AI Proxy vs Traefik AI Gateway — thin AI layer or marketplace routing?

Traefik’s AI layer adds AI-specific middlewares and OTel-friendly observability. For transparent provider selection and instant failover, use ShareAI.

Orq AI Proxy vs Eden AI — many AI services or provider neutrality?

Eden aggregates multiple AI services. ShareAI focuses on neutral model routing with pre-route transparency.

Orq AI Proxy vs LiteLLM — self-host proxy or managed marketplace?

LiteLLM is DIY; ShareAI is managed with marketplace data and failover. Keep LiteLLM for dev if you like.

Orq AI Proxy vs Unify — evaluation-driven model picks or marketplace routing?

Unify leans into quality evaluation; ShareAI adds live price/latency/uptime signals and instant failover across providers.

Orq AI Proxy vs Apigee — API management or provider-agnostic routing?

Apigee is broad API management. ShareAI offers transparent, multi-provider routing you can place behind your gateway.

Orq AI Proxy vs NGINX — DIY edge or managed routing?

NGINX offers DIY filters/policies. ShareAI avoids custom logic for provider selection and failover.

Orq AI Proxy vs Apache APISIX — plugin ecosystem or marketplace transparency?

APISIX brings a plugin-rich gateway. ShareAI brings pre-route provider/model visibility and resilient routing. Use both if you want policy at the edge and transparent multi-provider access.