Unify AI Alternatives 2026: Unify vs ShareAI and other alternatives

Updated February 2026

If you’re evaluating Unify AI alternatives or weighing Unify vs ShareAI, this guide maps the landscape the way a builder would. We define where Unify fits (quality-driven routing and evaluation), clarify how aggregators differ from gateways and agent platforms, and then compare the best alternatives. ShareAI comes first for teams that want four things: one API across many providers; a transparent marketplace that shows price, latency, uptime, and availability before you route; smart routing with instant failover; and people-powered economics where 70% of spend goes to the GPU providers who keep models online.

Inside, you’ll find a practical comparison table, a simple TCO framework, a migration path, and copy-paste API examples so you can ship quickly.

TL;DR (who should choose what)

Pick ShareAI if you want one integration for 150+ models across many providers, marketplace-visible costs and performance, routing + instant failover, and fair economics that grow supply.

• Start in the Playground to test a route in minutes: Open Playground

• Compare providers in the Model Marketplace: Browse Models

• Ship with the Docs: Documentation Home

Stick with Unify AI if your top priority is quality-driven model selection and evaluation loops within a more opinionated surface. Learn more: unify.ai.

Consider other tools (OpenRouter, Eden AI, LiteLLM, Portkey, Orq) when your needs skew toward breadth of general AI services, self-hosted proxies, gateway-level governance/guardrails, or orchestration-first flows. We cover each below.

What Unify AI is (and what it isn’t)

Unify AI focuses on performance-oriented routing and evaluation: benchmark models on your prompts, then steer traffic to candidates expected to produce higher-quality outputs. That’s valuable when you have measurable task quality and want repeatable improvements over time.

What Unify isn’t: a transparent provider marketplace that foregrounds per-provider price, latency, uptime, and availability before you route; nor is it primarily about multi-provider failover with user-visible provider stats. If you need those marketplace-style controls with resilience by default, ShareAI tends to be a stronger fit.

Aggregators vs. gateways vs. agent platforms (why buyers mix them up)

LLM aggregators: one API over many models/providers; marketplace views; per-request routing/failover; vendor-neutral switching without rewrites. → ShareAI sits here with a transparent marketplace and people-powered economics.

AI gateways: governance and policy at the network/app edge (plugins, rate limits, analytics, guardrails); you bring providers/models. → Portkey is a good example for enterprises that need deep traces and policy enforcement.

Agent/chatbot platforms: packaged conversational UX, memory, tools, channels; optimized for support/sales or internal assistants rather than provider-agnostic routing. → Not the main focus of this comparison, but relevant if you’re shipping customer-facing bots fast.

Many teams combine layers: a gateway for org-wide policy and a multi-provider aggregator for marketplace-informed routing and instant failover.

How we evaluated the best Unify AI alternatives

- Model breadth & neutrality: proprietary + open; easy to switch without rewrites

- Latency & resilience: routing policies, timeouts, retries, instant failover

- Governance & security: key handling, tenant/provider controls, access boundaries

- Observability: prompt/response logs, traces, cost & latency dashboards

- Pricing transparency & TCO: unit prices you can compare before routing; real-world costs under load

- Developer experience: docs, quickstarts, SDKs, playgrounds; time-to-first-token

- Community & economics: whether spend grows supply (incentives for GPU owners)

#1 — ShareAI (People-Powered AI API): the best Unify AI alternative

Why teams choose ShareAI first

With one API you can access 150+ models across many providers—no rewrites, no lock-in. The transparent marketplace lets you compare price, availability, latency, uptime, and provider type before you send traffic. Smart routing with instant failover gives resilience by default. And the economics are people-powered: 70% of every dollar flows to providers (community or company) who keep models online.
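To make "compare before you send traffic" concrete, here is a minimal sketch of ranking providers from marketplace-style stats. The field names (`pricePer1k`, `p95LatencyMs`, `uptime`, `available`) and all numbers are assumptions for illustration, not a documented ShareAI schema.

```javascript
// Hypothetical marketplace rows; field names and numbers are illustrative only.
const providers = [
  { name: "provider-a", pricePer1k: 0.0009, p95LatencyMs: 900, uptime: 0.999, available: true },
  { name: "provider-b", pricePer1k: 0.0006, p95LatencyMs: 1800, uptime: 0.99, available: true },
  { name: "provider-c", pricePer1k: 0.0004, p95LatencyMs: 700, uptime: 0.95, available: false },
];

// Drop providers that miss the hard limits, then sort cheapest first,
// breaking price ties by lower latency.
function rankProviders(rows, { maxLatencyMs, minUptime }) {
  return rows
    .filter((p) => p.available && p.p95LatencyMs <= maxLatencyMs && p.uptime >= minUptime)
    .sort((a, b) => a.pricePer1k - b.pricePer1k || a.p95LatencyMs - b.p95LatencyMs);
}

const ranked = rankProviders(providers, { maxLatencyMs: 2000, minUptime: 0.99 });
console.log(ranked.map((p) => p.name)); // [ 'provider-b', 'provider-a' ]
```

Treating latency and uptime as hard limits and price as the sort key is one possible policy; a latency-sensitive route might sort by latency instead.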

Quick links

Browse Models (Marketplace) • Open Playground • Documentation Home • Create API Key • User Guide (Console Overview) • Releases

For providers: earn by keeping models online

ShareAI is open supply. Anyone can become a provider (Community or Company) on Windows, Ubuntu, macOS, or Docker. Contribute idle-time bursts or run always-on. Choose your incentive: Rewards (earn money), Exchange (earn tokens), or Mission (donate a % to NGOs). As you scale, you can set your own inference prices and gain preferential exposure. Details are in the Provider Guide.

The best Unify AI alternatives (neutral snapshot)

Unify AI (reference point)

What it is: Performance-oriented routing and evaluation to choose better models per prompt.

Strengths: Quality-driven selection; benchmarking focus.

Trade-offs: Opinionated surface area; lighter on transparent marketplace views across providers.

Best for: Teams optimizing response quality with evaluation loops.

Website: unify.ai

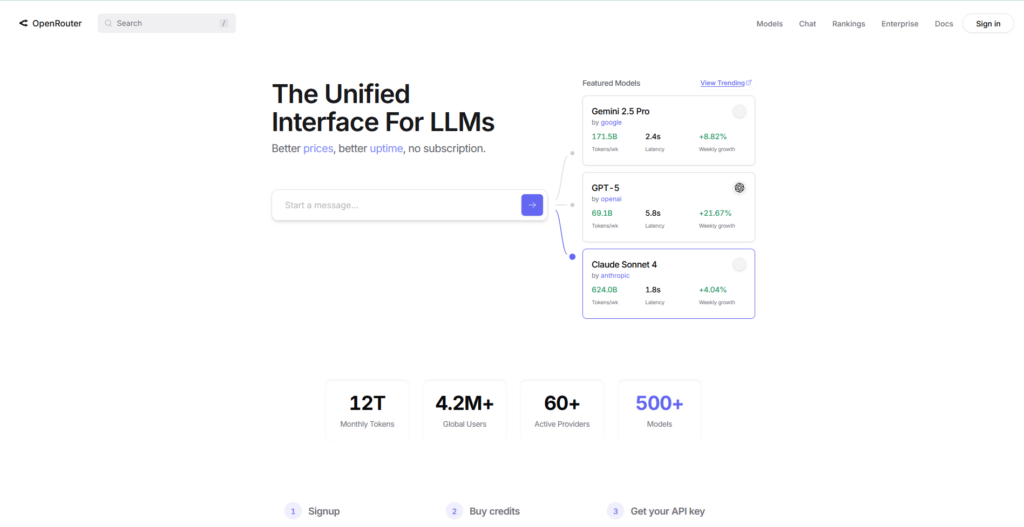

OpenRouter

What it is: Unified API over many models; familiar request/response patterns.

Strengths: Wide model access with one key; fast trials.

Trade-offs: Less emphasis on a provider marketplace view or enterprise control-plane depth.

Best for: Quick experimentation across multiple models without deep governance needs.

Eden AI

What it is: Aggregates LLMs and broader AI services (vision, translation, TTS).

Strengths: Wide multi-capability surface; caching/fallbacks; batch processing.

Trade-offs: Less focus on marketplace-visible per-provider price/latency/uptime before you route.

Best for: Teams that want LLMs plus other AI services in one place.

LiteLLM

What it is: Python SDK + self-hostable proxy that speaks OpenAI-compatible interfaces to many providers.

Strengths: Lightweight; quick to adopt; cost tracking; simple routing/fallback.

Trade-offs: You operate the proxy/observability; marketplace transparency and community economics are out of scope.

Best for: Smaller teams that prefer a DIY proxy layer.

Portkey

What it is: AI gateway with observability, guardrails, and governance—popular in regulated industries.

Strengths: Deep traces/analytics; safety controls; policy enforcement.

Trade-offs: Added operational surface; less about marketplace-style transparency across providers.

Best for: Audit-heavy, compliance-sensitive teams.

Orq AI

What it is: Orchestration and collaboration platform to move from experiments to production with low-code flows.

Strengths: Workflow orchestration; cross-functional visibility; platform analytics.

Trade-offs: Lighter on aggregation-specific features like marketplace transparency and provider economics.

Best for: Startups/SMBs that want orchestration more than deep aggregation controls.

Unify vs ShareAI vs OpenRouter vs Eden vs LiteLLM vs Portkey vs Orq (quick comparison)

| Platform | Who it serves | Model breadth | Governance & security | Observability | Routing / failover | Marketplace transparency | Pricing style | Provider program |
|---|---|---|---|---|---|---|---|---|
| ShareAI | Product/platform teams wanting one API + fair economics | 150+ models across many providers | API keys & per-route controls | Console usage + marketplace stats | Smart routing + instant failover | Yes (price, latency, uptime, availability, provider type) | Pay-per-use; compare providers | Yes: open supply; 70% to providers |
| Unify AI | Teams optimizing per-prompt quality | Multi-model | Standard API security | Platform analytics | Best-model selection | Not marketplace-first | SaaS (varies) | N/A |
| OpenRouter | Devs wanting one key across models | Wide catalog | Basic API controls | App-side | Fallback/routing | Partial | Pay-per-use | N/A |
| Eden AI | Teams needing LLM + other AI services | Broad multi-service | Standard controls | Varies | Fallbacks/caching | Partial | Pay-as-you-go | N/A |
| LiteLLM | Teams wanting self-hosted proxy | Many providers | Config/key limits | Your infra | Retries/fallback | N/A | Self-host + provider costs | N/A |
| Portkey | Regulated/enterprise teams | Broad | Governance/guardrails | Deep traces | Conditional routing | N/A | SaaS (varies) | N/A |
| Orq AI | Cross-functional product teams | Wide support | Platform controls | Platform analytics | Orchestration flows | N/A | SaaS (varies) | N/A |

Pricing & TCO: compare real costs (not just unit prices)

Teams often compare $/1K tokens and stop there. In practice, TCO depends on:

- Retries & failover during provider hiccups (affects effective token cost)

- Latency (fast models reduce user abandonment and downstream retries)

- Provider variance (spiky workloads change route economics)

- Observability storage (logs/traces for debugging & compliance)

- Evaluation tokens (when you benchmark candidates)

Simple TCO model (per month)

    TCO ≈ Σ (Base_tokens × Unit_price × (1 + Retry_rate))
          + Observability_storage
          + Evaluation_tokens
          + Egress
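A worked example can help sanity-check the model. The sketch below assumes unit prices are quoted per 1K tokens, that the evaluation term is already expressed as a monthly cost, and that the sum runs over routes; every figure is invented for illustration.

```javascript
// Monthly TCO sketch. All figures are made-up inputs, not real prices.
function monthlyTco({ routes, observabilityStorage, evaluationCost, egress }) {
  // Token spend per route, inflated by the share of requests that are retried.
  const tokenSpend = routes.reduce(
    (sum, r) => sum + (r.baseTokens / 1000) * r.unitPricePer1k * (1 + r.retryRate),
    0
  );
  return tokenSpend + observabilityStorage + evaluationCost + egress;
}

const total = monthlyTco({
  routes: [
    { baseTokens: 200_000_000, unitPricePer1k: 0.001, retryRate: 0.05 }, // 200 × 1.05 = 210
    { baseTokens: 50_000_000, unitPricePer1k: 0.004, retryRate: 0.02 },  // 200 × 1.02 = 204
  ],
  observabilityStorage: 40,
  evaluationCost: 25,
  egress: 10,
});
console.log(total.toFixed(2)); // 489.00
```

In this example the second route carries a quarter of the tokens but almost half the token spend, which is the kind of skew a unit-price comparison alone hides.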

Patterns that lower TCO in production

- Use marketplace stats to select providers by price + latency + uptime.

- Set per-provider timeouts, backup models, and instant failover.

- Run parallel candidates and return the first successful response to shrink tail latency.

- Preflight max tokens and guard price per call to avoid runaway costs.

- Keep an eye on availability; route away from saturating providers.
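The timeout and backup-model pattern from the list above can be sketched on the client side as follows. This is an illustration of the idea, not how any particular platform implements failover; `send(model, signal)` is a hypothetical caller-supplied function that performs one request, and the model names are placeholders.

```javascript
// Client-side failover sketch. `send(model, signal)` is a hypothetical
// caller-supplied function that performs one request and returns a promise.
async function attempt(model, send, timeoutMs) {
  const controller = new AbortController();
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => {
      controller.abort(); // cancels the request if send() passes the signal to fetch
      reject(new Error(`timed out after ${timeoutMs} ms`));
    }, timeoutMs);
  });
  try {
    return await Promise.race([send(model, controller.signal), timeout]);
  } finally {
    clearTimeout(timer);
  }
}

// Try the primary model first, then each backup in order.
async function withFailover(models, send, timeoutMs) {
  const errors = [];
  for (const model of models) {
    try {
      return { model, result: await attempt(model, send, timeoutMs) };
    } catch (err) {
      errors.push(`${model}: ${err.message}`);
    }
  }
  throw new Error(`All candidates failed: ${errors.join("; ")}`);
}

// Demo with a stub instead of a network call: the primary fails, the backup answers.
const stubSend = async (model) => {
  if (model === "primary-model") throw new Error("503 from provider");
  return `reply from ${model}`;
};

withFailover(["primary-model", "backup-model"], stubSend, 2000)
  .then((out) => console.log(out.model, "->", out.result));
// backup-model -> reply from backup-model
```

In real use, `send` would wrap a `fetch` call and pass `signal` through so that a timed-out request is actually cancelled rather than left running.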

Migration guide: moving to ShareAI from Unify (and others)

From Unify AI

Keep your evaluation workflows where useful. For production routes where marketplace transparency and instant failover matter, map model names, validate prompt parity, shadow 10% of traffic through ShareAI, monitor latency/error budgets, then step up to 25% → 50% → 100%.
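One way to implement the 10% → 25% → 50% → 100% step-up is a deterministic bucket per request key, so the same user or session stays on the same route as the percentage grows. The sketch below is an assumption about how you might do this in your own application code; `bucketOf` and `useNewRoute` are hypothetical helper names.

```javascript
// Deterministic percentage rollout: the same key always lands in the same bucket.
function bucketOf(key) {
  // FNV-1a style 32-bit hash over UTF-16 code units; any stable hash would do.
  let h = 0x811c9dc5;
  for (let i = 0; i < key.length; i++) {
    h ^= key.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0;
  }
  return h % 100; // bucket in 0..99
}

// True when this key should be served by the new route at the given rollout stage.
function useNewRoute(key, rolloutPercent) {
  return bucketOf(key) < rolloutPercent;
}

// Anyone included at 10% stays included at 25%, 50%, and 100%.
for (const percent of [10, 25, 50, 100]) {
  console.log(percent, useNewRoute("user-1234", percent));
}
```

Because a key's bucket never changes, raising the percentage only adds traffic to the new route; it never flips an already-migrated key back.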

From OpenRouter

Map model names; validate schema/fields; compare providers in the marketplace; switch per route. Marketplace data makes swaps straightforward.

From LiteLLM

Replace self-hosted proxy on production routes you don’t want to operate; keep LiteLLM for dev if desired. Trade proxy ops for managed routing + marketplace visibility.

From Portkey / Orq

Define feature-parity expectations (analytics, guardrails, orchestration). Many teams run a hybrid: keep specialized features where they’re strongest, use ShareAI for transparent provider choice and failover.

Security, privacy & compliance checklist (vendor-agnostic)

- Key handling: rotation cadence; minimal scopes; environment separation

- Data retention: where prompts/responses are stored and for how long; redaction options

- PII & sensitive content: masking, access controls, regional routing for data locality

- Observability: prompt/response logs, filters, pseudonymization for oncall & audits

- Incident response: escalation paths and provider SLAs

- Provider controls: per-provider routing boundaries; allow/deny by model family
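As a small illustration of the masking item in the checklist, the sketch below redacts email addresses and long digit runs before a prompt is written to logs. The two patterns are deliberately simple assumptions; real PII detection needs a broader, tested rule set.

```javascript
// Minimal log redaction sketch; the patterns are illustrative, not exhaustive.
const EMAIL = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;
const LONG_NUMBER = /\b\d{13,19}\b/g; // card-length digit runs

function redactForLogs(text) {
  return text.replace(EMAIL, "[email]").replace(LONG_NUMBER, "[number]");
}

console.log(redactForLogs("Refund jane.doe@example.com, card 4111111111111111."));
// Refund [email], card [number].
```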

Copy-paste API examples (Chat Completions)

Prerequisite: create a key in Console → Create API Key

cURL (bash)

    #!/usr/bin/env bash
    # Set your API key
    export SHAREAI_API_KEY="YOUR_KEY"

    # Chat Completions
    curl -X POST "https://api.shareai.now/v1/chat/completions" \
      -H "Authorization: Bearer $SHAREAI_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "llama-3.1-70b",
        "messages": [
          { "role": "user", "content": "Give me a short haiku about reliable routing." }
        ],
        "temperature": 0.4,
        "max_tokens": 128
      }'

JavaScript (fetch) — Node 18+/Edge runtimes

    // Set your API key in an environment variable
    // process.env.SHAREAI_API_KEY = "YOUR_KEY"

    async function main() {
      const res = await fetch("https://api.shareai.now/v1/chat/completions", {
        method: "POST",
        headers: {
          "Authorization": `Bearer ${process.env.SHAREAI_API_KEY}`,
          "Content-Type": "application/json"
        },
        body: JSON.stringify({
          model: "llama-3.1-70b",
          messages: [
            { role: "user", content: "Give me a short haiku about reliable routing." }
          ],
          temperature: 0.4,
          max_tokens: 128
        })
      });

      if (!res.ok) {
        console.error("Request failed:", res.status, await res.text());
        return;
      }

      const data = await res.json();
      console.log(JSON.stringify(data, null, 2));
    }

    main().catch(console.error);
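The example above prints the whole response. If you only need the reply text, a small helper like the one below may be enough. It assumes the response follows the common OpenAI-style Chat Completions shape (`choices[0].message.content`); that shape is an assumption here, so check the Docs before relying on it.

```javascript
// Assumes an OpenAI-style body: { choices: [{ message: { content: "..." } }] }.
// `firstReply` is a hypothetical helper, not part of any SDK.
function firstReply(data) {
  const content = data?.choices?.[0]?.message?.content;
  if (typeof content !== "string") {
    const preview = JSON.stringify(data ?? null).slice(0, 200);
    throw new Error("Unexpected response shape: " + preview);
  }
  return content;
}

// Demo with a hand-written object in the assumed shape.
const sample = { choices: [{ message: { role: "assistant", content: "Routes hold steady" } }] };
console.log(firstReply(sample)); // Routes hold steady
```

Failing loudly on an unexpected shape makes schema differences visible during migration instead of surfacing later as `undefined` in your UI.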

FAQ — Unify AI vs. each alternative (and where ShareAI fits)

Unify AI vs ShareAI — which for multi-provider routing and resilience?

Choose ShareAI. You get one API across 150+ models, marketplace-visible price/latency/uptime/availability before routing, and instant failover that protects UX under load. Unify focuses on evaluation-led model selection; ShareAI emphasizes transparent provider choice and resilience—plus 70% of spend returns to providers who keep models online. → Try it live: Open Playground

Unify AI vs OpenRouter — what’s the difference, and when does ShareAI win?

OpenRouter offers one-key access to many models for quick trials. Unify emphasizes quality-driven selection. If you need marketplace transparency, per-provider comparisons, and automatic failover, ShareAI is the better choice for production routes.

Unify AI vs Eden AI — which for broader AI services?

Eden spans LLMs plus other AI services. Unify focuses on model quality selection. If your priority is cross-provider LLM routing with visible pricing and latency and instant failover, ShareAI balances speed to value with production-grade resilience.

Unify AI vs LiteLLM — DIY proxy or evaluation-led selection?

LiteLLM is great if you want a self-hosted proxy. Unify is for quality-driven model selection. If you’d rather not operate a proxy and want marketplace-first routing + failover and a provider economy, pick ShareAI.

Unify AI vs Portkey — governance or selection?

Portkey is an AI gateway: guardrails, policies, deep traces. Unify is about selecting better models per prompt. If you need routing across providers with transparent price/latency/uptime and instant failover, ShareAI is the aggregator to pair with (you can even use a gateway + ShareAI together).

Unify AI vs Orq AI — orchestration or selection?

Orq centers on workflow orchestration and collaboration. Unify does evaluation-led model choice. For marketplace-visible provider selection and failover in production, ShareAI delivers the aggregator layer your orchestration can call.

Unify AI vs Kong AI Gateway — infra control plane vs evaluation-led routing

Kong AI Gateway is an edge control plane (policies, plugins, analytics). Unify focuses on quality-led selection. If your need is multi-provider routing + instant failover with price/latency visibility before routing, ShareAI is the purpose-built aggregator; you can keep gateway policies alongside it.

Developer experience that ships

Time-to-first-token matters. The fastest path: open the Playground and run a live request in minutes, create your API key, ship with the Docs, and track platform progress in Releases.

Prompt patterns worth testing

• Set per-provider timeouts; define backup models; enable instant failover.

• Run parallel candidates and accept the first success to cut P95/P99.

• Request structured JSON outputs and validate on receipt.

• Guard price per call via max tokens and route selection.

• Re-evaluate model choices monthly; marketplace stats surface new options.
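The "parallel candidates, first success" pattern from the list above can be sketched with `Promise.any`. As before, `send(model, signal)` is a hypothetical caller-supplied request function and the model names and latencies are placeholders. Note the trade-off: every candidate that starts generating may still be billed, so this buys lower tail latency at a higher token cost.

```javascript
// Fire the same request at several candidates and keep the first success.
async function firstSuccess(models, send) {
  const controllers = models.map(() => new AbortController());
  const attempts = models.map((model, i) =>
    send(model, controllers[i].signal).then((result) => ({ model, result }))
  );
  try {
    // Resolves with the first fulfilled attempt; rejects with an
    // AggregateError only if every attempt fails.
    return await Promise.any(attempts);
  } finally {
    controllers.forEach((c) => c.abort()); // cancel whatever is still in flight
  }
}

// Demo with stubbed latencies: model-b fails fast, model-c is the first to succeed.
const stubLatency = { "model-a": 30, "model-b": 10, "model-c": 20 };
const stubSend = (model) =>
  new Promise((resolve, reject) =>
    setTimeout(
      () => (model === "model-b" ? reject(new Error("overloaded")) : resolve(`reply from ${model}`)),
      stubLatency[model]
    )
  );

firstSuccess(Object.keys(stubLatency), stubSend).then((out) => console.log(out.model));
// model-c
```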

Conclusion: pick the right alternative for your stage

Choose ShareAI when you want one API across many providers, an openly visible marketplace, and resilience by default—while supporting the people who keep models online (70% of spend goes to providers). Choose Unify AI when evaluation-led model selection is your top priority. For specific needs, Eden AI, OpenRouter, LiteLLM, Portkey, and Orq each bring useful strengths—use the comparison above to match them to your constraints.

Start now: Open Playground • Create API Key • Read the Docs