Local AI Models (LLMs)

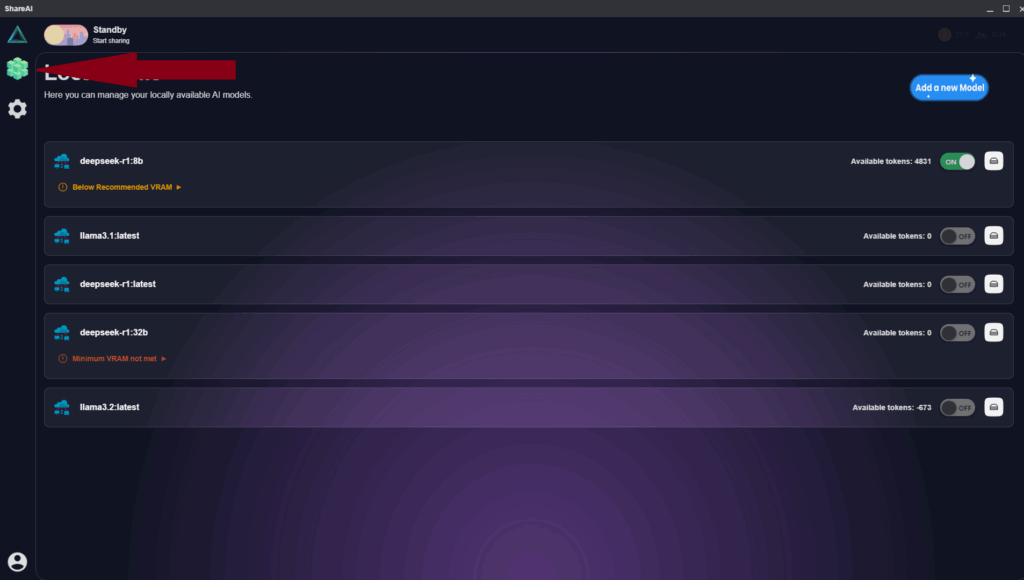

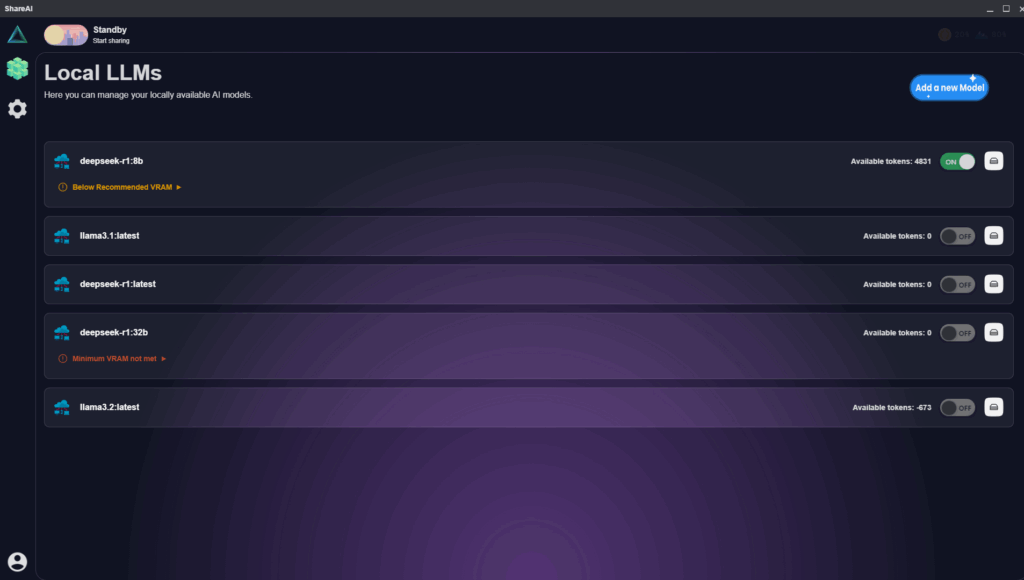

The AI Models (LLMs) page allows you to manage and configure the AI models stored locally on your device. Here, you can choose which models you make available for sharing, check token balances, and remove models you no longer need.

Managing Your AI Models

Each AI model listed on this page represents a model currently installed on your device.

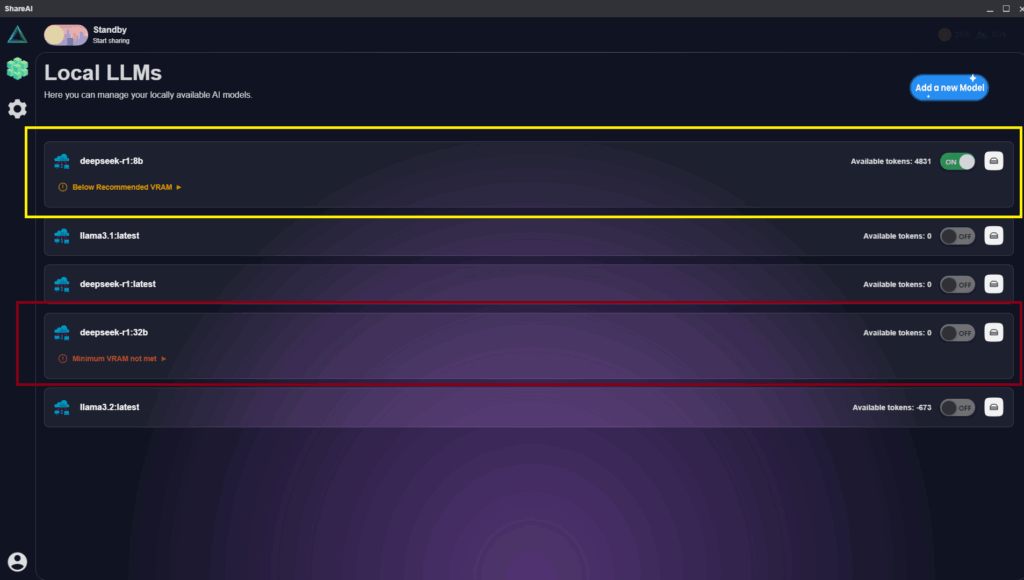

- Toggle ON/OFF Switch:

  - Use this toggle to enable or disable an AI model for sharing within the ShareAI network.

  - When toggled ON, your device makes the model available to other users, and you can earn tokens for tasks processed using this model.

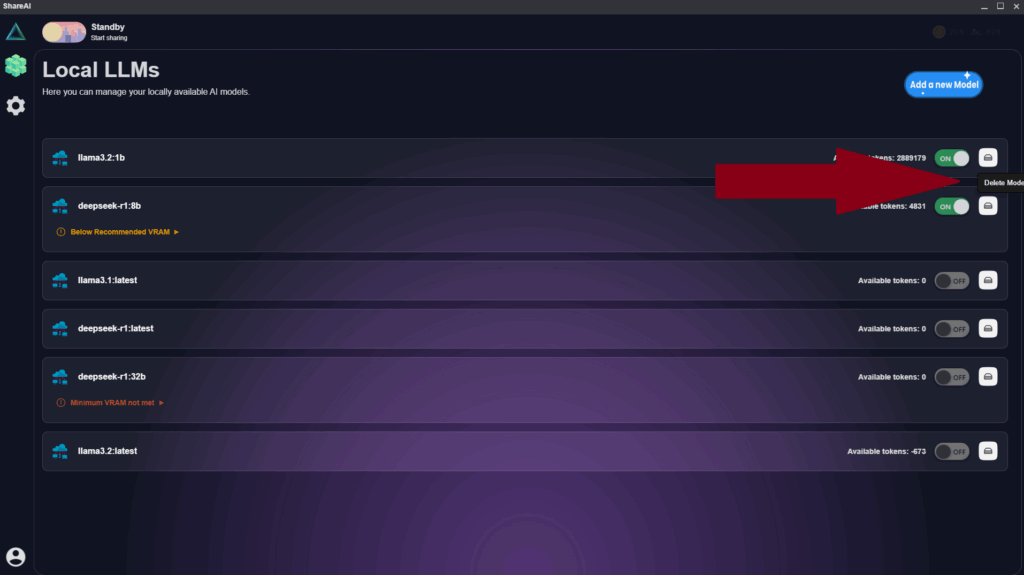

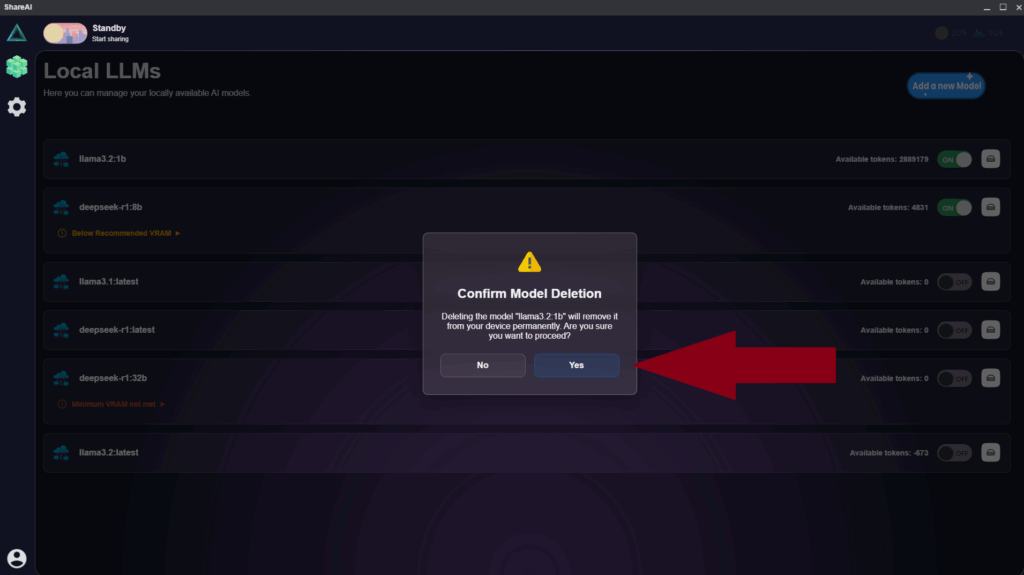

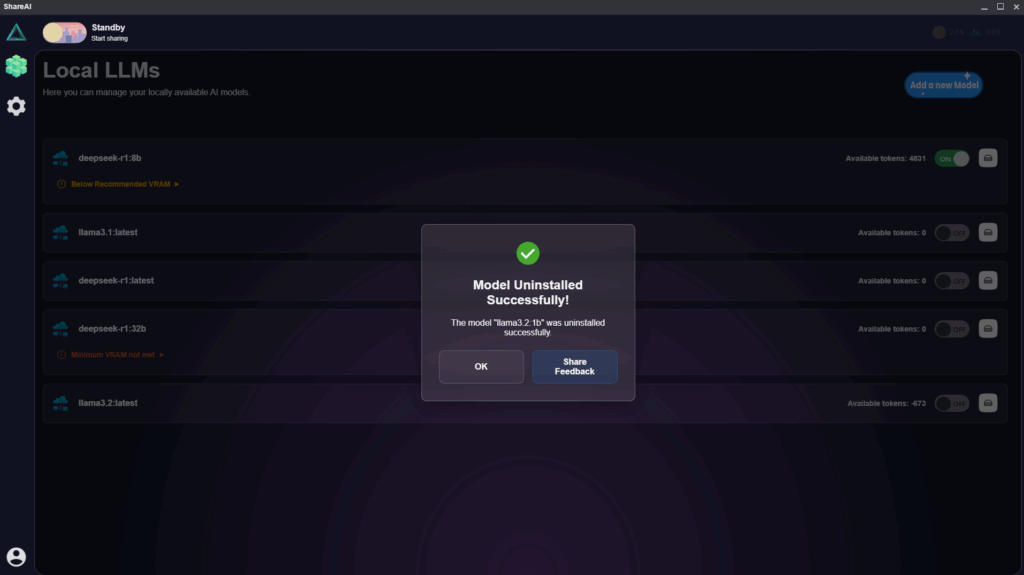

Deleting AI Models

To remove an AI model from your device:

- Click the Delete Model button next to the toggle switch.

- Confirm your action when prompted.

Note:

- Deleting an AI model permanently removes it from your device. If you need this model again later, you’ll have to reinstall it.

- Toggle the model OFF before deleting it to avoid any interruptions.

VRAM Warnings Explained

- Below Recommended VRAM: The AI model will run, but your GPU has less VRAM than recommended, so performance may be reduced.

- Minimum VRAM Not Met: You cannot activate or share this model because your device lacks sufficient GPU VRAM.
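The two warnings above amount to comparing your GPU's VRAM against two thresholds per model. The following is a minimal sketch of that comparison, not ShareAI's actual implementation: the names `VramStatus`, `classify_vram`, and `can_share` are hypothetical, the values are in gigabytes, and treating VRAM exactly equal to a threshold as meeting it is an assumption.

```python
from enum import Enum


class VramStatus(Enum):
    """Hypothetical labels mirroring the two warnings described above."""
    OK = "OK"
    BELOW_RECOMMENDED = "Below Recommended VRAM"
    MINIMUM_NOT_MET = "Minimum VRAM Not Met"


def classify_vram(available_gb: float, minimum_gb: float, recommended_gb: float) -> VramStatus:
    """Compare available GPU VRAM against a model's two thresholds.

    Assumption: VRAM exactly equal to a threshold counts as meeting it.
    """
    if minimum_gb > recommended_gb:
        raise ValueError("minimum_gb must not exceed recommended_gb")
    if available_gb < minimum_gb:
        return VramStatus.MINIMUM_NOT_MET
    if available_gb < recommended_gb:
        return VramStatus.BELOW_RECOMMENDED
    return VramStatus.OK


def can_share(status: VramStatus) -> bool:
    """Only the 'Minimum VRAM Not Met' status blocks activation and sharing."""
    return status is not VramStatus.MINIMUM_NOT_MET


if __name__ == "__main__":
    # Example: a GPU with 6 GB of VRAM and a model that needs 4 GB minimum, 8 GB recommended.
    status = classify_vram(available_gb=6, minimum_gb=4, recommended_gb=8)
    print(status.value, "| can share:", can_share(status))
```

In this sketch, a model in the "Below Recommended VRAM" state can still be shared, while "Minimum VRAM Not Met" blocks it, matching the descriptions above.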

Token Balance

Each model displays the current balance of tokens you’ve earned by sharing that model within the network. A negative balance means that recent tasks were processed without sufficient tokens, typically because of the “Priority over my own Device” setting.

Recommendations

- Keep multiple models active to increase your contribution and improve your chances of receiving tasks.

- Regularly check model performance and VRAM requirements to ensure optimal device performance and token generation.