Best Moonshot AI Kimi K2.5 alternatives for startups & developers in 2026 (and how to swap models fast with one ShareAI gateway)

Moonshot AI’s Kimi K2.5 is one of those releases that instantly changes the vibe in open models: multimodal, agentic, long-context, and genuinely useful for “real work” workflows. If you’re researching Kimi K2.5 alternatives, you’re probably not questioning its power—you’re questioning fit.

The best alternative depends on what you’re shipping: a coding agent, a long-document analyst, a tool-using research bot, or a production feature where reliability and cost predictability matter more than raw specs. And because model pricing and quality can change quickly, the long-term win is keeping your product model-switchable—not locked to a single vendor or model.

This guide covers the strongest Kimi K2.5 alternatives for startups and developers, plus how to swap models easily via a single AI gateway like ShareAI.

Quick comparison of Kimi K2.5 alternatives

Here’s a practical shortlist, organized by what teams usually need in production. Think of this as your “try these first” map.

| Option | Best for | Why teams pick it over Kimi K2.5 | Tradeoffs |
|---|---|---|---|
| DeepSeek-V3.2 | Reasoning + agents on a budget | Reasoning-first focus with agent-friendly modes | You still need evals; behavior varies by configuration |
| GLM-4.7 | Agent workflows + UI generation | Strong “spec → UI” tendencies and multi-step workflow reliability | Ecosystem maturity varies by stack/provider |
| Devstral 2 | Code agents / SWE workflows | Specialized for repo-aware software engineering tasks | Narrower focus than generalist models |
| Claude Opus 4.5 | High-stakes reasoning + coding | Premium reliability and strong performance for critical work | Higher cost; closed model constraints |
| Grok 4.1 Fast | Massive context + tool-calling | Designed around ultra-long context and agent tooling | Closed model; style/voice fit may vary |
| ShareAI (gateway) | Staying model-agnostic | One API to many models; swap models without rewrites | Not a model itself, but an infrastructure layer |

What is Moonshot AI’s Kimi K2.5?

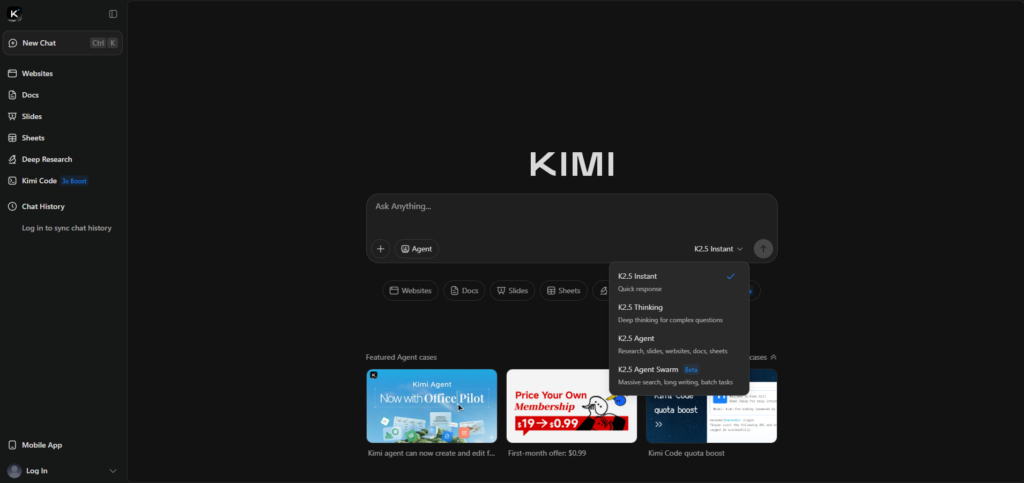

Kimi K2.5 is a flagship model from Moonshot AI, marketed as “open source,” with an emphasis on multimodal reasoning and agentic execution. The official release page highlights multimodal inputs (including video) and “Agent Swarm” style parallelization for complex tasks.

If you want the official feature list and release context, start here: Kimi K2.5 (Moonshot AI).

Why people look for Kimi K2.5 alternatives

Most teams don’t switch because Kimi is “bad.” They switch because constraints change once you go from demo to production.

- You need the best coding reliability for multi-file changes, bug-fixing, or repo-aware workflows.

- You need huge context (contracts, knowledge bases, repos) without brittle chunking strategies.

- You want lower variance for critical, customer-facing, or regulated workflows.

- You don’t want lock-in—you want to keep leverage when pricing, limits, or quality shifts.

Open-weight alternatives (maximum control)

DeepSeek-V3.2 (reasoning + agent workflows)

DeepSeek-V3.2 is a strong pick when you want a “reasoning-first” model for technical tasks and agent pipelines, especially if you’re cost-sensitive. Many teams use it as a daily-driver model for structured reasoning and tool-use patterns, though you should still confirm its reliability on your own evals.

Reference: DeepSeek API release notes.

GLM-4.7 (agentic workflows + UI generation)

GLM-4.7 is worth testing if your product overlaps with Kimi’s “visual-to-code” and workflow execution angle. Teams often evaluate it for multi-step agent behavior and UI/front-end generation reliability.

Reference: GLM-4.7 docs.

Devstral 2 (software engineering agents)

If your main requirement is end-to-end software engineering—multi-file edits, repo navigation, test fixing—Devstral 2 is positioned as a specialist. It’s a strong Kimi K2.5 alternative when “coding agent” is the core job, not multimodal generalism.

Reference: Mistral Devstral 2 announcement.

Closed models (frontier performance + enterprise posture)

Claude Opus 4.5 (high-stakes reasoning/coding)

Claude Opus 4.5 is a common “pay for reliability” choice when correctness matters more than cost. If your workload is sensitive to subtle reasoning errors or coding mistakes, it’s one of the strongest premium alternatives to Moonshot AI’s Kimi K2.5.

Reference: Anthropic: Claude Opus 4.5.

Massive-context + real-time tool alternatives

Grok 4.1 Fast (ultra-long context + tools)

Grok 4.1 Fast is notable for one reason: it’s built around extremely long context and agent tooling. If you have “read everything first” workflows (big repos, large doc sets), it is a compelling candidate to test alongside Kimi K2.5.

Reference: xAI: Grok 4.1 Fast.

The startup “cheat code”: don’t bet the product on one model

Even if Kimi K2.5 is your favorite today, building your product so it can switch models later is the best long-term engineering decision. Pricing shifts, outages happen, rate limits appear, and sometimes models regress.

A simple, durable pattern is: choose a default model for the common path, a specialist model for hard requests (coding agent or massive context), and a fallback model for reliability. This is exactly what an AI gateway should make easy.
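As a rough sketch of that three-tier pattern, the bash function below maps a task label to an ordered list of models: primary first, fallback last. The task labels, the `route_model` name, and the model IDs are all placeholders (assumptions, not ShareAI identifiers); look up real IDs in the Model Marketplace and override the variables from your environment.

```bash
#!/usr/bin/env bash
# Placeholder model IDs (assumptions): override via environment variables
# with real identifiers from the ShareAI Model Marketplace.
DEFAULT_MODEL="${DEFAULT_MODEL:-your-default-model}"
SPECIALIST_MODEL="${SPECIALIST_MODEL:-your-specialist-model}"
FALLBACK_MODEL="${FALLBACK_MODEL:-your-fallback-model}"

# route_model TASK: prints the models to try for TASK, in order.
# Task labels are hypothetical; the last entry is always the fallback.
route_model() {
  case "$1" in
    coding-agent|long-context) echo "$SPECIALIST_MODEL $FALLBACK_MODEL" ;;
    *)                         echo "$DEFAULT_MODEL $FALLBACK_MODEL" ;;
  esac
}

# Usage: read -r -a models <<< "$(route_model coding-agent)"
```

Keeping the mapping in one function means changing the default or the specialist is a one-line edit rather than a hunt through call sites.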

How ShareAI makes Kimi K2.5 and its alternatives interchangeable

ShareAI is built for model optionality: one OpenAI-compatible API across a broad catalog, so you can compare and route models without rewriting integrations. Start with the Model Marketplace, test prompts in the Playground, and integrate via the API Reference.

If you’re onboarding a team, the Console overview is a fast orientation. For production planning, keep an eye on Release Notes and the Provider Guide.

Example: swap the model field (no rewrite)

This is the core advantage of a single AI gateway: your app keeps the same request shape, and you switch models by changing one field. First, create a key in Console: Create API Key.

```bash
curl -s "https://api.shareai.now/api/v1/chat/completions" \
  -H "Authorization: Bearer $SHAREAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "deepseek-r1:70b",
    "messages": [
      {
        "role": "user",
        "content": "Summarize this spec and list edge cases."
      }
    ],
    "temperature": 0.2,
    "stream": false
  }'
```

Now swap just the model name (everything else stays the same):

```bash
curl -s "https://api.shareai.now/api/v1/chat/completions" \
  -H "Authorization: Bearer $SHAREAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "llama-3.1-70b",
    "messages": [
      {
        "role": "user",
        "content": "Summarize this spec and list edge cases."
      }
    ],
    "temperature": 0.2,
    "stream": false
  }'
```

In a Kimi K2.5 alternatives workflow, this lets you run quick bake-offs, add fallbacks, and keep leverage as the model landscape shifts.
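If you run bake-offs from scripts, it can help to wrap that request in a small shell function so the model ID is the only argument that changes. A minimal sketch, assuming bash and the same endpoint and payload as the curl examples; `build_body` and `chat` are hypothetical helper names, and the helper does no JSON escaping, so prompts containing quotes, backslashes, or newlines would need a tool such as `jq`.

```bash
#!/usr/bin/env bash
# Same endpoint and settings as the curl examples; only the model varies.
SHAREAI_URL="https://api.shareai.now/api/v1/chat/completions"

# build_body MODEL PROMPT: prints the JSON request body.
# Assumption: PROMPT needs no JSON escaping (no quotes, backslashes, newlines).
build_body() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}], "temperature": 0.2, "stream": false}' "$1" "$2"
}

# chat MODEL PROMPT: sends the request and prints the raw JSON response.
chat() {
  curl -s "$SHAREAI_URL" \
    -H "Authorization: Bearer $SHAREAI_API_KEY" \
    -H "Content-Type: application/json" \
    -d "$(build_body "$1" "$2")"
}

# Usage (requires SHAREAI_API_KEY):
#   chat "deepseek-r1:70b" "Summarize this spec and list edge cases."
#   chat "llama-3.1-70b"   "Summarize this spec and list edge cases."
```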

How to choose the right Kimi K2.5 alternative in 30 minutes

- Define the job (code agent fixes tests, RAG answers from internal docs, contract analysis, UI-to-code).

- Create a small eval set (10–30 prompts), including failure cases and edge cases.

- Test 3–5 candidates (Kimi K2.5 + two specialists + one cheap fallback) and score for correctness, formatting reliability, tool-use accuracy, and latency.

- Ship with a fallback so outages, limits, and regressions don’t become user-facing incidents.
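To make the scoring step concrete, here is a minimal sketch that summarizes hand-graded results. It assumes a hypothetical CSV layout (`model,prompt_id,correct,latency_ms`, with a header row and `correct` recorded as 1 or 0) and prints each model's pass rate and mean latency, best pass rate first. Formatting reliability and tool-use accuracy could be tracked the same way as extra 0/1 columns.

```bash
#!/usr/bin/env bash
# score_results FILE
# FILE: hypothetical CSV "model,prompt_id,correct,latency_ms" with a header row.
# Prints "model pass_rate_percent mean_latency_ms", highest pass rate first.
score_results() {
  awk -F, 'NR > 1 {
      n[$1]++            # prompts graded for this model
      pass[$1] += $3     # correct is 1 (pass) or 0 (fail)
      lat[$1] += $4      # running latency total in ms
    }
    END {
      for (m in n) printf "%s %.0f %.0f\n", m, 100 * pass[m] / n[m], lat[m] / n[m]
    }' "$1" | sort -k2,2nr
}

# Usage: score_results bakeoff.csv
```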

If you want a clean starting point for setup and best practices, bookmark the ShareAI Documentation and the API quickstart.

FAQ

Is Kimi K2.5 open source or open-weight?

Moonshot AI markets Kimi K2.5 as “open source” and links to public availability via common OSS distribution channels. In practice, many teams use the term open-weight to be precise: weights are available, but licensing and the full training stack may differ from “classic” open-source software norms.

Reference: Kimi K2.5 official page.

When should I choose Kimi K2.5 over alternatives?

Choose Kimi K2.5 when your workload is heavily multimodal (including video), agentic, and benefits from the model’s “swarm” approach to decomposing large tasks. If you’re building UI-from-visual workflows, it’s also a natural place to start.

Which alternative is best for coding agents vs general coding?

If you’re building a repo-aware agent that edits multiple files, runs tests, and iterates, start with Devstral 2. If you want premium reliability for complex coding, Claude Opus 4.5 is a common benchmark pick, especially for critical paths.

Which alternative is best for long documents and huge context?

For “read everything first” workflows, Grok 4.1 Fast is in the massive-context bucket. That said, many products do better with RAG plus a smaller context window, so test both approaches instead of assuming bigger context always wins.

How do I compare models fairly?

Use the same prompt set, grading rubric, and settings (temperature, max tokens, formatting rules). Grade per task: correctness, format/JSON reliability, tool accuracy, latency, and cost per successful outcome.

What’s the fastest way to A/B test Kimi K2.5 alternatives without rebuilding my app?

Standardize on one API interface and swap the model field. Using a gateway like ShareAI, you can compare candidates in the Playground and then ship the same request shape via the API.

Can I route by “cheapest” or “fastest”?

That’s the idea behind policy-based routing: choose a model based on constraints like cost ceiling, latency target, or task type. Even if you start simple (manual model selection), building toward routing policies keeps you flexible as providers and models evolve.
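A minimal sketch of one such policy, “cheapest model that meets a latency target”, in bash. The catalog file format (`model cost_per_1k_tokens p95_latency_ms`, whitespace-separated) and the `pick_cheapest` name are assumptions; the numbers should come from your own measurements and current pricing.

```bash
#!/usr/bin/env bash
# pick_cheapest MAX_LATENCY_MS CATALOG_FILE
# CATALOG_FILE: hypothetical lines "model cost_per_1k_tokens p95_latency_ms".
# Prints the cheapest model whose latency is within the target;
# exits with status 1 if no model qualifies.
pick_cheapest() {
  awk -v max="$1" '
    $3 <= max && (best == "" || $2 < best_cost) { best = $1; best_cost = $2 }
    END { if (best == "") exit 1; print best }' "$2"
}

# Usage: model="$(pick_cheapest 1500 catalog.txt)" || model="your-fallback-model"
```

Swapping the roles of the cost and latency columns gives the “fastest model under a cost ceiling” variant.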

How do fallback models help in production?

Fallbacks protect you from transient failures, provider rate limits, regional issues, and model regressions. A fallback strategy often matters more to user experience than chasing the single “best” model on paper.
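A minimal fallback chain, sketched in bash under a few assumptions: `call_model` and `with_fallback` are hypothetical helpers, the request reuses the ShareAI endpoint from the curl examples, and any non-zero exit (including HTTP errors such as 429 or 5xx, which curl's `--fail` flag turns into a failing exit status) moves on to the next model.

```bash
#!/usr/bin/env bash
# call_model MODEL PROMPT: one request through the gateway.
# Assumption: PROMPT needs no JSON escaping.
call_model() {
  curl -sf "https://api.shareai.now/api/v1/chat/completions" \
    -H "Authorization: Bearer $SHAREAI_API_KEY" \
    -H "Content-Type: application/json" \
    -d "$(printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}' "$1" "$2")"
}

# with_fallback PROMPT MODEL [MODEL...]: tries each model in order and prints
# the first successful response; returns 1 if every model fails.
with_fallback() {
  local prompt="$1" model out
  shift
  for model in "$@"; do
    # Capture output first so a failed attempt never leaks partial text.
    if out="$(call_model "$model" "$prompt")"; then
      printf '%s\n' "$out"
      return 0
    fi
    echo "model $model failed; trying the next one" >&2
  done
  return 1
}

# Usage: with_fallback "Summarize this spec and list edge cases." your-primary-model your-fallback-model
```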

How do I control costs?

Use a cheap default model for the common path, cap output tokens, and reserve premium models for requests that truly need them. Track cost per successful outcome, not just cost per token.
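Cost per successful outcome is total spend divided by the number of requests that met your quality bar, so a cheap model that fails often can end up costing more than a pricier one. A minimal sketch, assuming a hypothetical per-request log in CSV form (`model,cost_usd,success`, with a header row and `success` as 1 or 0); `cost_per_success` is an illustrative name.

```bash
#!/usr/bin/env bash
# cost_per_success FILE
# FILE: hypothetical CSV "model,cost_usd,success" with a header row.
# Prints "model cost_per_successful_request", sorted by model name.
cost_per_success() {
  awk -F, 'NR > 1 { spend[$1] += $2; ok[$1] += $3 }
    END {
      for (m in spend) {
        if (ok[m] > 0) printf "%s %.4f\n", m, spend[m] / ok[m]
        else printf "%s no-successes\n", m   # avoid dividing by zero
      }
    }' "$1" | sort
}

# Usage: cost_per_success requests.csv
```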

Do I need to self-host for privacy or compliance?

Not always. It depends on your data classification, residency needs, and vendor terms. Start with policy (what data can be sent where), then pick the deployment approach that matches it.

What tasks still benefit from open-weight self-hosting?

Common reasons include data locality, predictable latency, deep customization, and tight integration with internal tooling and guardrails. If those are your constraints, open-weight models can be a strong foundation—if you’re ready to own the ops.

What if model behavior changes over time?

Assume it will. Keep a regression eval set, monitor quality drift, and make sure you can roll back fast by switching models or providers.
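One way to turn that regression eval into a release gate, sketched in bash: compare the current pass rate on your regression set against a stored baseline and fail when it drops by more than a threshold. The `check_regression` name and the default 5-point threshold are arbitrary assumptions; tune the threshold to how noisy your eval set is.

```bash
#!/usr/bin/env bash
# check_regression BASELINE_PCT CURRENT_PCT [MAX_DROP_POINTS]
# Returns 1 (and prints a warning) when CURRENT_PCT is more than
# MAX_DROP_POINTS below BASELINE_PCT; returns 0 otherwise.
check_regression() {
  local baseline="$1" current="$2" max_drop="${3:-5}"
  # awk handles decimal percentages, which bash arithmetic cannot.
  if awk -v b="$baseline" -v c="$current" -v d="$max_drop" 'BEGIN { exit !(b - c > d) }'; then
    echo "regression: pass rate fell from $baseline% to $current%" >&2
    return 1
  fi
  return 0
}

# Usage: check_regression 92 85 || echo "roll back: switch the model field"
```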

Wrap-up: pick the best model today, keep the ability to switch tomorrow

Kimi K2.5 is a serious model from Moonshot AI, and for many teams it’s an excellent baseline. But the most production-friendly approach is choosing the best model for each job—and keeping the ability to switch when the landscape changes.

If you want that flexibility without constant reintegration work, start by browsing the Models marketplace, testing in the Playground, and creating your account via Sign in / Sign up.